Thursday, 15 September 2022

Monday, 24 May 2021

Code smells: a look at a switch statement

G'day:

There was a section in last week's Working Code Podcast: Book Club #1 Clean Code by "Uncle Bob" Martin (pt2) where the team were discussing switch statements being a code smell to avoid in OOP (this is at about the 28min mark; I can't find an audio stream of it that I can deep-link to though). I didn't think they quite nailed their understanding of it (sorry team, I don't mean that to sound patronising), so afterwards I asked Namesake if it might be useful if I wrote an article on switch as a code smell. He confirmed that it might've been more a case of mis-articulation than not getting it, but I ought to go ahead anyhow. So I decided to give it some thought.

Coincidentally, I happened to be looking at some of Adam's own code in his Semaphore project, and something I was looking at the test for was… a switch statement. So I decided to think about that.

I stress I said I'd think about it because I'm def on the learning curve with all this stuff, and whilst I've seen some really smelly switch statements, and they're obvious, I can't say that I can reason through a good solution to every switch I see. This is an exercise in learning and thinking for me.

Here's the method with the switch in it:

    private boolean function ruleMathIsTrue(required any userAttributeValue, required string operator, required any ruleValue){
        switch (arguments.operator){
            case '=':
            case '==':
                return arguments.userAttributeValue == arguments.ruleValue;
            case '!=':
                return arguments.userAttributeValue != arguments.ruleValue;
            case '<':
                return arguments.userAttributeValue < arguments.ruleValue;
            case '<=':
                return arguments.userAttributeValue <= arguments.ruleValue;
            case '>':
                return arguments.userAttributeValue > arguments.ruleValue;
            case '>=':
                return arguments.userAttributeValue >= arguments.ruleValue;
            case 'in':
                return arrayFindNoCase(arguments.ruleValue, arguments.userAttributeValue) != 0;
            default:
                return false;
        }
    }

First up: this is not an egregious case at all. It's isolated in a private method rather than being dumped in the middle of some other logic, and that's excellent. The method is close enough to passing a check of the single-responsibility principle to me: it does combine both "which approach to take" with "and actually doing it", but it's a single - simple - expression each time, so that's cool.

What sticks out to me though is the repetition between the cases and the implementation:

They're mostly the same except the three edge-cases:

- = needs to map to ==;

- in, which needs a completely different sort of operation.

- Instead of just throwing an exception if an unsupported operator is used, it just goes "aah… let's just be false" (and return false and throwing an exception are both equally edge-cases anyhow).

This makes me itchy.
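To make that last point concrete, here's a minimal sketch of the "throw rather than shrug" alternative. It is not Adam's code: compareOrThrow, its trimmed-down set of operators, and the UnsupportedOperatorException type name are all made up for illustration.

```cfml
// Hypothetical variant of the switch: an unsupported operator is an error, not "false"
boolean function compareOrThrow(required any lhs, required string operator, required any rhs) {
    switch (arguments.operator) {
        case "=":
        case "==":
            return arguments.lhs == arguments.rhs;
        case "<":
            return arguments.lhs < arguments.rhs;
        case "in":
            return arrayFindNoCase(arguments.rhs, arguments.lhs) != 0;
        default:
            // the type name is an assumption for this sketch, not something from Semaphore
            throw(type="UnsupportedOperatorException", message="Unsupported operator: #arguments.operator#");
    }
}

// A supported operator still just returns a boolean
isLess = compareOrThrow(1, "<", 2);

// An unsupported one now fails loudly, and the caller decides what to do about it
try {
    compareOrThrow(1, "~=", 2);
    errorMessage = "";
} catch (UnsupportedOperatorException e) {
    errorMessage = e.message;
}
```

The trade-off is that every caller now needs to consider the exception, which is possibly why the original opts for a quiet false.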

One thing I will say for Adam's code, and that helps me in this refactoring exercise, is that he's got good testing of this method, so I am safe to refactor stuff, and when the tests pass I know I'm all good.

My first attempt at refactoring this takes the approach that a switch can often be re-implemented as a map: each case is a key; and the payload of the case is just some handler. This kinda makes the method into a factory method (kinda):

    operationMap = {
        '=' : () => userAttributeValue == ruleValue,
        '==' : () => userAttributeValue == ruleValue,
        '!=' : () => userAttributeValue != ruleValue,
        '<' : () => userAttributeValue < ruleValue,
        '<=' : () => userAttributeValue <= ruleValue,
        '>' : () => userAttributeValue > ruleValue,
        '>=' : () => userAttributeValue >= ruleValue,
        'in' : () => ruleValue.findNoCase(userAttributeValue) != 0
    };

    return operationMap.keyExists(operator) ? operationMap[operator]() : false

OK so I have a map - lovely - but it's still got the duplication in it, and it might be slightly clever, but it's not really as clear as the switch.

Next I try to get rid of the duplication by dealing with each actual case in a specific way:

    operator = operator == "=" ? "==" : operator;

    supportedComparisonOperators = ["==","!=","<","<=",">",">="];
    if (supportedComparisonOperators.find(operator)) {
        return evaluate("arguments.userAttributeValue #operator# arguments.ruleValue");
    }
    if (operator == "in") {
        return arrayFindNoCase(arguments.ruleValue, arguments.userAttributeValue);
    }
    return false;

This works, and gets rid of the duplication, but it's way less clear than the switch. And I was laughing at myself by the time I wrote this:

    operator = operator == "=" ? "==" : operator

I realised I could get rid of most of the duplication even in the switch statement:

    switch (arguments.operator){
        case "=":
            operator = "=="
        case '==':
        case '!=':
        case '<':
        case '<=':
        case '>':
        case '>=':
            return evaluate("arguments.userAttributeValue #operator# arguments.ruleValue");
        case 'in':
            return arrayFindNoCase(arguments.ruleValue, arguments.userAttributeValue) != 0;
        default:
            return false;
    }

Plus I give myself bonus points for using evaluate in a non-rubbish situation. It's still a switch though, innit?

The last option I tried was a more actual polymorphic approach, but because I'm being lazy and CBA refactoring Adam's code to inject dependencies, and separate-out the factory from the implementations, it's not as nicely "single responsibility principle" as I'd like. Adam's method becomes this:

    private boolean function ruleMathIsTrue(required any userAttributeValue, required string operator, required any ruleValue){
        return new BinaryOperatorComparisonEvaluator().evaluate(userAttributeValue, operator, ruleValue)
    }

I've taken the responsibility for how to deal with the operators out of the FlagService class, and put it into its own class. All Adam's class needs to do now is to inject something that implements the equivalent of this BinaryOperatorComparisonEvaluator.evaluate interface, and stop caring about how to deal with it. Just ask it to deal with it.

The implementation of BinaryOperatorComparisonEvaluator is a hybrid of what we had earlier:

    component {

        handlerMap = {
            '=' : (operand1, operand2) => compareUsingOperator(operand1, operand2, "=="),
            '==' : compareUsingOperator,
            '!=' : compareUsingOperator,
            '<' : compareUsingOperator,
            '<=' : compareUsingOperator,
            '>' : compareUsingOperator,
            '>=' : compareUsingOperator,
            'in' : inArray
        }

        function evaluate(operand1, operator, operand2) {
            return handlerMap.keyExists(operator) ? handlerMap[operator](operand1, operand2, operator) : false
        }

        private function compareUsingOperator(operand1, operand2, operator) {
            return evaluate("operand1 #operator# operand2")
        }

        private function inArray(operand1, operand2) {
            return operand2.findNoCase(operand1) > 0
        }
    }

In a true polymorphic handling of this, instead of just mapping methods, the factory method / map would just give FlagService the correct object it needs to deal with the operator. But for the purposes of this exercise (and expedience), I'm hiding that away in the implementation of BinaryOperatorComparisonEvaluator itself. Just imagine compareUsingOperator and inArray are instances of specific classes, and you'll get the polymorphic idea. Even having the switch in here would be fine, because a factory method is one of the places where I think a switch is kinda legit.
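For what it's worth, here's a rough sketch of the shape I mean by that. I'm cheating: each "handler" is a struct carrying a closure, standing in for an instance of a real component, just so it fits in one snippet. The names getHandlerFor, isTrueFor and the various handlers are all hypothetical, and it only covers a few of the operators.

```cfml
// Each struct stands in for an object implementing the same one-method interface
equalsHandler = {isTrueFor : (operand1, operand2) => operand1 == operand2};
lessThanHandler = {isTrueFor : (operand1, operand2) => operand1 < operand2};
inArrayHandler = {isTrueFor : (operand1, operand2) => operand2.findNoCase(operand1) > 0};

// Stands in for the original "default: return false" behaviour
alwaysFalseHandler = {isTrueFor : (operand1, operand2) => false};

// The factory is the only place that knows which operator maps to which handler
function getHandlerFor(required string operator) {
    var handlers = {
        "=" : equalsHandler,
        "==" : equalsHandler,
        "<" : lessThanHandler,
        "in" : inArrayHandler
    };
    return handlers.keyExists(arguments.operator) ? handlers[arguments.operator] : alwaysFalseHandler;
}

// The calling code asks for a handler, then just tells it to get on with it
handler = getHandlerFor("in");
isMatch = handler.isTrueFor("b", ["A", "B", "C"]);
```

The calling code never mentions an operator after it has asked for its handler, which is the bit that makes this polymorphic rather than just a lookup.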

One thing I do like about this handling is the "partial application" approach I'm taking to solve the = edge-case.

But do you know what? It's still not as clear as Adam's original switch. What I have enjoyed about this exercise is trying various different approaches to removing the smell, and all the things I tried had smells of their own, or - in the case of the last one - perhaps less smell, but the code just isn't as clear.

I'm hoping someone reading this goes "ah now, all you need to do is [this]" and comes up with a slicker solution.

I'm still going to look out for a different example of switch as a code smell. One of those situations where the switch is embedded in the middle of a block of code that then goes on to use the differing data each case prepares, and the code in each case being non-trivial. The extraction of those cases into separate methods in separate classes that all fulfil a relevant interface will make it clearer when to treat a switch as a smell, and solve it using polymorphism.

I think what we take from this is the knowledge that one ought not be too dogmatic about stamping out "smells" just cos some book says to. Definitely try the exercise (and definitely use TDD to write the first pass of your code so you can safely experiment with refactoring!), but if the end result ticks boxes for being "more pure", but it's at the same time less clear: know when to back out, and just run with the original. Minimum you'll be a better programmer for having taken yerself through the exercise.

Thanks to the Working Code Podcast crew for inspiring me to look at this, and particularly to Adam for letting me use his code as a discussion point.

Righto.

--

Adam

Monday, 26 April 2021

Change in look...

G'day:

I see my change in look has been leaked to the public:

I hope Adam Tuttle doesn't mind me copying his work.

This was taken from Top 30 Coldfusion RSS Feeds. I will touch base with them and ask them "WTF?"

Righto.

--

Adam

Sunday, 11 April 2021

TDD: eating the elephant one bite at a time

G'day:

I've got another interesting reader comment to address today. My namesake Adam Tuttle has sent through this wodge of questions, attached to my earlier article "TDD is not a testing strategy":

You weren't offering to teach anyone about TDD in this post, but hey... I'm here, you're here, I have questions... Shall we?

One of the things I struggle with w/r/t TDD is the temptation to test every. single. action. For example, a large, complex form-save. Dozens, possibly 100 fields. Whether that ends up saved via ORM entities or queries, chances are good that since the form is so large the logic is a bit beyond a dirt-simple single-CRUD query. Multiple relationships, order of operations, permissions to modify different fields, etc.

My gut reaction is to skip the unit-testing layer and jump up to an integration or E2E test: submit the form, then view the detail-view (or re-open the record for editing) and assert that the values changed have persisted and they are what you're expecting, where you're expecting it, on the latter view.

BUT doesn't that almost entirely eliminate the possibility of using mocks to make the tests fast(er), the base-state predictable, and to not leave a mess in a designated testing db/env? My (and I mean this literally!) feeble, bad-at-TDD brain doesn't comprehend what a good solution is to this problem.

Unless the solution is to not test that aspect of the code? I fully subscribe to the "100% test coverage is a fools errand" ethos, so perhaps this is something that should just not be tested; and save the testing for things that are doing "interesting" algorithmic work? (not-crud)

Since I specifically mentioned permission to edit a certain field in my example, I guess I should say that stands out to me as something I would likely want to test. Thinking about it now, my brain wants to architect a system that accepts the user object and the field name as inputs and returns a boolean for editable or not. Easy enough to implement and you're basically changing the conditional in the save method from "if user has X permission, entity.setProperty(newval)" to "if evaluatePermissions(user, property) = true, entity.setProperty(newval)", so there's no big mental leap to the next developer to read the code... but it also seems hairy to separate the permission logic from the form-save logic, not because of the separation, but because it leads towards combining the permission logic of lots of disparate and unrelated forms. I'm not seeing how that could be cleanly implemented.

So yeah. There's a can of worms for you. What do you make of that?

Nice one. There's a lot of work there, so I am going to approach it how I'd approach addressing any other requirements: a bit at a time. Like I'm doing TDD. Except I've NFI how I can write tests for a blog article, so just imagine that part. Also remember that TDD is not a testing strategy, it's a design strategy, so my TDD-ish approach here is focusing on identifying cases, and addressing them one at a time. OKOK, this is torturing my fixation with TDD a bit. Sorry.

You weren't offering to teach anyone about TDD in this post

OK, so first point. I'm always open to excuses to think about TDD practices, and how we can use them to address our work. So don't worry about that.

It needs to save a large form

For example, a large, complex form-save. Dozens, possibly 100 fields.

For my convenience I am going to interpret this as two separate things: the form, and the code that processes a post request. I suspect you were only meaning the latter. But, really, the same approach applies to both.

In seeing a large HTML form, you are not using a TDD mindset: how do I test that huge thing?! Using the TDD mindset, it's not a huge thing. It starts off being nothing. It starts off perhaps with "requests to /myForm.html return a 200-OK". From there it might move on to "It will be submitted as a POST to /processMyForm", and then to "after a successful form submission the user is redirected to /formSubmissionResults.html, and that request's status is 201-CREATED". Small steps. No form fields at all yet. But we have demonstrated that the requirements so far have been tested (and implemented, and pass the tests).

Next you might start addressing a form field requirement: "it has a text field with maximum length 100 for firstName". Quickly after that you have the same case for lastName. And then there might be 20 other fields that are all text and all have a sole constraint of maxLength, so you can test all of those really quickly, but still with the same amount of care, using a data provider that passes the case variations to what is otherwise the same test. This is still super quick, and your case still shows that you have addressed the requirement. And you can demonstrate that with your test output:

    ✓ should have a required text input for fullName, maxLength 100, and label 'Full name'
    ✓ should have a required text input for phoneNumber, maxLength 50, and label 'Phone number'
    ✓ should have a required text input for emailAddress, maxLength 320, and label 'Email address'
    ✓ should have a required password input for password, maxLength 255, and label 'Password'
    ✓ should have a required workshopsToAttend multiple-select box, with label 'Workshops to attend'
    ✓ should list the workshop options fetched from the back-end
    ✓ should have a button to submit the registration
    - should leave the submit button disabled until the form is filled

    7 passing (48ms)
    1 pending

    MOCHA Tests completed successfully

(I've lifted that from the blog article I mention lower down).

Not all form fields are so simple. Some need to be select boxes that source their data from [somewhere]. "It has a select for favouriteColour, which offers values returned from a call to /colours/?type=favourite". This needs better testing than just name and length. "It has a password field that only accepts [rules]". Definitely needs testing discrete from the other tests. Etc.

Your form is a collection of form fields all of which will have stated requirements. If the requirements have been stated, it stands to reason you should demonstrate you've met the requirements. Both now in the first iteration of development, and that this continues to hold true during subsequent iterations (direct or indirect: basically new work doesn't break existing tests).

I cover an approach to this in article "Vue.js: using TDD to develop a data-entry form". It's a small form, but the technique scales.

Bottom line: when using TDD you don't start with a massive form.

It's a similar story on the form submission handler. The TDD process doesn't start with "holy f**k 100 form fields!", it starts off with a POST request. Or it might start with a controller method receiving a request object that represents that request. Each value in that request must have validation, and you must test that, because validation is a) critical, b) fiddly and error-prone. But you start with one field: firstName must exist, and must be between 1-100 characters. You'd have these cases:

- It's not passed with the request at all (fail);

- It's passed with the request but its value is empty (fail);

- It's passed with the request and its length is 1 (pass);

- It's passed with the request and its length is 100 (pass);

- It's passed with the request and its length is 101 (fail);

These are requirements your client has given you: You need to test them!

The validation tests are perhaps a good example of where one might use a focused unit test, rather than a functional test that actually makes a request to /processMyForm and analyses the response. Maybe you just pass a request object, or the request body values to a validate method, and check the results.
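As a minimal sketch of that sort of focused check, here are the five firstName cases run directly against a validation function, with no HTTP request involved. The function name validateFirstName, the shape of its return value (an array of error messages, empty meaning valid) and the error wording are all assumptions for the sake of the example.

```cfml
// Hypothetical validator for the firstName rule only: must exist, length 1-100
array function validateFirstName(required struct requestValues) {
    if (!arguments.requestValues.keyExists("firstName")) {
        return ["firstName is required"];
    }
    var nameLength = len(arguments.requestValues.firstName);
    if (nameLength < 1 || nameLength > 100) {
        return ["firstName must be between 1 and 100 characters"];
    }
    return [];
}

// The five cases from the list above, each stating whether it should pass validation
testCases = [
    {label : "not passed at all", requestValues : {}, shouldPass : false},
    {label : "passed but empty", requestValues : {firstName : ""}, shouldPass : false},
    {label : "length 1", requestValues : {firstName : "Z"}, shouldPass : true},
    {label : "length 100", requestValues : {firstName : repeatString("Z", 100)}, shouldPass : true},
    {label : "length 101", requestValues : {firstName : repeatString("Z", 101)}, shouldPass : false}
];

// Same check for every case: only the data varies
for (testCase in testCases) {
    passed = arrayIsEmpty(validateFirstName(testCase.requestValues));
    if (passed != testCase.shouldPass) {
        throw(type="AssertionFailed", message="Unexpected result for case: #testCase.label#");
    }
}
```

In real life the loop would be a data-provider-driven test in whatever testing framework you use; the hand-rolled throw is only there to keep the snippet standalone.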

Once validation is in place, you'd need to vary the response based on those results: "when validation fails it returns a 400-BAD-REQUEST"; "when validation fails it returns a non-empty-array errors with validation failure details", etc. All actual requirements you've been given; all need to be tested.

Then you'd move on to whatever other business logic is needed, step by step, until you get to a point where yer firing some values into storage or whatever, and you check the expected values for each field are passed to the right place in storage. Although I'd still use a mock (or spy, or whatever the precise term is), and just check what values it receives, rather than actually letting the test write to storage.
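Here's a rough sketch of that spy idea, hand-rolled so it stands alone. Everything in it is hypothetical: saveRegistration, the persist method, and the field names are stand-ins, and a mocking library would normally create the spy for you.

```cfml
// Hypothetical save logic: storage is passed in, so a test can swap in a spy
function saveRegistration(required struct formValues, required struct storage) {
    // validation and other business logic would happen before this point
    arguments.storage.persist({
        firstName : arguments.formValues.firstName,
        lastName : arguments.formValues.lastName
    });
}

// The spy records what it was asked to store instead of writing anywhere
receivedRecords = [];
storageSpy = {
    persist : function(record) {
        arrayAppend(receivedRecords, record);
    }
};

saveRegistration({firstName : "Jane", lastName : "Doe", notForStorage : "ignored"}, storageSpy);

// The test then checks what reached "storage", not what ended up in a database
if (arrayLen(receivedRecords) != 1 || receivedRecords[1].firstName != "Jane") {
    throw(type="AssertionFailed", message="Expected one record with the submitted firstName");
}
```

Nothing touches a real database, so the test stays fast and leaves no mess behind.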

It also has end-to-end acceptance tests

At this point you can demonstrate the requirements have been tested, and you know they work. I'd then put an end-to-end happy path test on that (maybe all the way from automating the form submission with a virtual web client, maybe just by sending a POST request; either is valid). And then I'd do an end-to-end unhappy path test, eg: when validation fails are the correct messages put in the correct place on the form, or whatever. Maybe there are other valid variations of end-to-end tests here, but I would not think to have an end-to-end test for each form field, and each validation rule. That'd be fiddly to write, and slow to run.

It does need to cover all the behaviour

I fully subscribe to the "100% test coverage is a fools errand" ethos

Steady on there. There's 100% and there's 100%. This notion is applied to lines-of-code, or 100% of methods, or basically implementation detail stuff. And it's also usually trotted out by someone who's looking at the code after it's been done, and is faced with a whole pile of testing to write and trying to work out ways of wriggling out of it. This is no slight on you, Adam (Tuttle), it's just how I have experienced devs rationalise this with me. If one does TDD / BDD, then one is not thinking about lines of code when one is testing. One is thinking about behaviour. And the behaviour has been requested by a client, and the behaviour needs to work. So we test the behaviour. Whether that's 1 line of code or 100 is irrelevant. However the test will exercise the code, because the code only ever came into existence to address the case / behaviour being delivered. Using TDD generally results in ~100% of the code being covered because you don't write code you don't need, which is the only time code might not be covered. How did that code get in there? Why did you write it? Obviously it's not needed so get rid of it ;-).

The key here is that 100% of behaviour gets covered.

Nothing is absolute though. There will be situations where some code - for whatever reason - is just not testable. This is rare, but it happens. In that case: don't get hung up by it. Isolate it away by itself, and mark it as not covered (eg in PHPUnit we have @codeCoverageIgnore), and move on. But be circumspect when making this decision: the situations in which one can't test some code are very rare. I find devs quite often seem to confuse "can't" with "don't feel like ~". Two different things ;-)

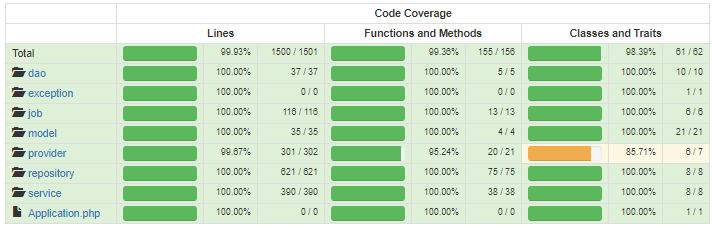

I'll also draw you back to an article I wrote ages ago about the benefits of 100% test coverage: "Yeah, you do want 100% test coverage". TL;DR: where in these two displays can you spot then new code that is accidentally missing test coverage:

Accidents are easy to spot when a previously all-green board starts being not all-green.

It uses emergent design to solve large problems

[My] brain wants to architect a system that [long and complicated description follows]

One of the premises of TDD is that you let the solution architect itself. I'm not 100% behind this as I can't quite see it yet, but I know I do find it really daunting if my requirement seems to be "it all does everything I need it to do", and I don't know where to start with that. This was my real life experience doing that Vue.js stuff I linked to above. I really did start with "yikes this whole form thing is gonna be a monster!? I don't even know where to start!". I pushed the end result I thought I might have to the back of my mind, especially the architectural side of things (which will probably more define itself in the refactor stage of things, not the red / green part).

And I started by adding a route for the form, and then I responded to requests to that route with a 200-OK. And then moved on to the next bite of the elephant.

HTH.

--

Adam (Cameron)

Thursday, 11 February 2021

Thoughts on Working Code podcast's Testing episode

G'day:

Working Code (@WorkingCodePod on Twitter) is a podcast by some friends and industry colleagues: Tim Cunningham, Carol Hamilton, Ben Nadel and Adam Tuttle.

(apologies for swiping your image without permission there, team)

It's an interesting take on a techo podcast, in their own words from their strapline:

Working Code is a technology podcast unlike all others. Instead of diving deep into specific technologies to learn them better, or focusing on soft-skills, this one is like hanging out together at the water cooler or in the hallway at a technical conference. Working Code celebrates the triumphs and fails of working as a developer, and aims to make your career in coding more enjoyable.

I think they achieve this, and it makes for a good listen.

So that's that, I just wanted to say they've done good work, and go listen.

…

Oh, just one more thing.

Yesterday they released their episode "Testing". I have to admit my reaction to a lot of what was said was… "poor", so I pinged my namesake and said "I have some feedback". After a brief discussion on Signal, Adam & I concluded that I might try to do a "reaction blog article" on the topic, and they might see if they can respond to the feedback, if warranted, at a later date. They are recording tonight apparently, and I'm gonna try to get this across to them for their morning coffee.

Firstly as a reminder: I'm pretty keen on testing, and I am also keen on TDD as a development practice. I've written a fair bit on unit testing and TDD both. I'm making a distinction between unit testing and TDD very deliberately. I'll come back to this. But anyway this is why I was very very interested to see what the team had to say about testing. Especially as I already knew one of them doesn't do automated testing (Ben), and another (Carol) I believe has only recently got into it (I think that's what she said, a coupla episodes ago). I did not know Adam or Tim's position on it.

And just before I get under way, I'll stick a coupla Twitter messages here I saw recently. At the time I saw them I was thinking about Ben's claim to a lack of testing, and they struck a particular chord with me.

I do not know Maaret or Mathias, but I think they're on the money here.

OK, so I'm listening to the podcast now. I'm going to pull quotes from it, and comment on them where I think it's "necessary".

[NB I'm exercising my right to reproduce small parts of the Working Code Podcast transcript here, as I'm doing so for the purposes of commentary / criticism]

Ahem.

Adam @ 11:27:

…up front we should acknowledge you know we're not testing experts. None of us [...] have been to like 'testing college'. […]There's a good chance we're going to get something wrong.

I think, in hindsight, this podcast needed this caveat to be made louder and clearer. To be blunt - and I don't think any would disagree with me here - all four are very far from being testing experts. Indeed one is even a testing naysayer. I think there are some dangerously ill-informed opinions being expressed as the podcast progresses, and as these are all people who are looked-up-to in their community, I think there's a risk people will take onboard what they say as "advice". Even if there's this caveat at the beginning. This might seem like a very picky thing to draw on, but perhaps I should have put it at the end of the article, after there's more context.

Carol @ 12:07:

Somebody find that monster already.

Hi guys.

Ben @ 12:41

I test nothing. And it's not like a philosophical approach to life, it's more just I'm not good at testing

Adam @ 13:09:

Clearly that's working out pretty well for you, you've got a good career going.

I hear this a bit. "I don't test and I get by just fine". This is pretty woolly thinking, and it's false logic people will jump on to justify why they don't do things. And Adam is just perpetuating the myth here. The problem with this rationalisation is demonstrated with an analogy of going on a journey without a map and just wandering aimlessly but you still (largely accidentally) arrive at your intended destination. In contrast had you used a map, you'd've been more efficient with your time and effort, and been able to progress even further on the next leg of your journey sooner. Ben's built a good career for himself. Undoubtedly. Who knows how much better it would be had he… used a map.

Also going back to Ben's comment about kind of explaining away why he doesn't test because he's not good at it. Everyone starts off not being good at testing mate. The rest of us do something about it. This is a disappointing attitude from someone as clued-up as Ben. Also if you don't know about something… don't talk about it mate. Inform yourself first and then talk about it.

Ben @ 13:21:

[…] some additional context. So - one - I work on a very small team. Two: all the people who work on my team are very very familiar with the software. Three: we will never ever hire a new engineer specifically for my team. Cos I work on the legacy codebase. The legacy codebase is in the process of being phased out. […] I am definitely in a context where I don't have to worry about hiring a new person and training them up on a system and then thinking they'll touch something in the code that they don't understand how it works. That's like the farthest possible thing from my day-to-day operations currently.

Um… so? I'm not being glib. You're still writing new logic, or altering existing logic. If you do that, it intrinsically needs testing. I mean you admitted you do manual testing, but it beggars belief that a person in the computer industry will favour manually performing a pre-defined repetitive task as a (prone-to-error) human, rather than automating this. We're in the business of automating repetitive tasks!

I'd also add that this would be a brilliant, low-risk, environment for you to get yourself up to speed with TDD and unit testing, and work towards the point where it's just (brain) muscle memory to work that way. And then you'll be all ready once you progress to more mission-critical / contemporary codebases.

Ben @ 14:42:

I can wrap my head around testing when it comes to testing a data workflow that is completely pure, meaning you have a function or you have a component that has functions and you give it some inputs and it generates some outputs. I can 100% wrap my head around testing that. And sometimes actually when I'm writing code that deals with something like that, even though I'm not writing tests per se, I might write a scratch file that instantiates that component and sends data to it and checks the output just during the development process that I don't have to load-up the whole application.

Ben. That's a unit test. You have written a unit test there. So why don't you put it in a test class instead of a scratch file, and - hey presto - you have a persistent test that will guard against that code's behaviour somehow changing to break those rules later on. You are doing the work here, you're just not doing it in a sensible fashion.

Ben @ 15:18:

Where it breaks down immediately for me is when I have to either a) involve a database, or b) involve a user interface. And I know that there's all kinds of stuff that the industry has brought to cater to those problems. I've just never taken the time to learn.

There's a bit of a blur here between the previous train of thought which was definitely talking about unit tests of a unit of code, and now we're talking about end-to-end testing. These are two different things. I am not saying Ben doesn't realise this, but they're jammed up next to each other in the podcast so the distinction is not being made. These two kinds of testing are separate ideas, only really coupled by the fact they are both types of testing. Ben's right, the tooling is there, and - in my experience at least with the browser emulation stuff - it's pretty easy and almost fun to use. Ben already tests his stuff manually every time he does a release, so it would seem sensible to me to take the small amount of time it takes to get up to speed with these things, and then instead of testing something manually, take the time to automate the same testing, then it's taken care of thenceforth. It's just a matter of being a bit more wise with one's time usage.

Adam @ 16:39

The reason that we don't have a whole lot of automated tests for our CFML code is simply performance. So when we started our product I tried really hard to do TDD. If I was writing a new module or a new section of that module I would work on tests along with that code, and would try to stay ahead of the game there. And what ended up happening was I had for me - let's say - 500 functions that could run, I had 400 tests. And I don't want to point a finger at any particular direction, but when you take the stack as a whole and you say "OK now run my test suite" and it takes ten minutes to run those tests and [my product, the project I was working on] is still in its infancy, and you can see this long road of so much more work that has to be done, and it takes ten minutes to run the tests - you know, early on - there was no way that that was going to be sustainable. So we kind of abandoned hope there. [...] I have, in more recent years, on a more recent stack seen way better performance of tests. [...] So we are starting to get more into automated testing and finding it actually really helpful. [...] I guess what I wanted to say there is that a perfectly valid reason to have fewer or no tests is if it doesn't work well on your platform.

Adam starts off well here, both in what he's saying and his historical efforts with tests, but he then goes on to pretty much blame ColdFusion for not being very good at running tests. This is just untrue, sorry mate. We had thousands upon thousands of tests running on ColdFusion, and they ran in an amount of time best measured in seconds. And when we ported that codebase to PHP, we had a similar number of test cases, and they ran in round about the same amount of time (PHP was faster, that said, but also the tests were better). I think the issue here - and Adam confirms this about 30sec after the quote above, and again about 15min later when he comes back to this - is that your tests weren't written so well, and they were not focused on the logic (as TDD-oriented tests ought to be), they were basically full integration tests. Full integration tests are excellent, but you don't want your tight red / green / refactor testing cycle to be slowed down by external services like databases. That's the wrong sort of testing there. My reaction to you saying your test runs are slow is not to say "ColdFusion's fault", it's to say "your tests' fault". And that's not a reason to not test. It's a reason to check what you've been doing, and fix it. I'm applying hindsight here for you obviously, but this ain't the conclusion/message you should be delivering here.

Carol @ 20:03:

I also want to say that if you are starting out and you're starting to add test, don't let slowness stop you from doing it.

Spot on. I hope Ben was listening there. When you start to learn something new, it is going to take more time. I think this is sometimes why people conclude that testing takes a lot of time: the people arriving at that conclusion are basing it on their time spent on the learning curve. Accept that things go slow when you are learning, but also accept that things will become second nature. And especially with writing automated tests it's not exactly rocket-science, the initial learning time is not that long. Just… decide to learn how to test stuff, start automating your testing, and stick at it.

Ben @ 21:24:

I was thinking about debugging incidents and getting a page in the middle of the night and having to jump on a call and you seeing the problem, and now you have to do a hotfix, and push a deployment in the middle of the night […]. And imagine having to sit there for 30 minutes for your tests to run just so you can push out a hotfix. Which I thought to myself: that would drive me crazy.

At this point I think Ben is just trying to invent excuses to justify to himself why he's right to eschew testing. I'm reminded of Maaret's Twitter message I included above. The subtext of Ben's comment here is that if one tests manually, then you're more flexible in what you can choose to re-test when you are hotfixing. Well obviously if you can make that call re manual tests, then you can make the same call with automated tests! So his position here is just specious. Doubly so because automated tests are intrinsically going to be faster than the equivalent manual tests to start with. Another thing I'll note with this entire analogy: you've already got yourself in a shit situation by needing to hotfix stuff in the middle of the night. Are you really sure you want to be less diligent in how you implement that fix? In my experience that approach can lead to a second / third / fourth hotfix being needed in rapid succession. Hotfix situations are definitely ones of "work smarter, not faster".

Ben @ 21:56:

I'm wondering if there should be a test budget that you can have for your team where you like have "here is the largest amount of time we're willing to let testing block a deployment". And anything above that have to be tests that sit in an optional bucket where it's up to the developer to run them as they see fit, but isn't necessarily tests that would block deployment. I don't know if that's totally crazy.

Adam continues @ 22:36:

You have to figure out which tests are critical path, which ones are "must pass", and these ones are like "low risk areas" […] are the things I would look for to make optional.

Yep, OK there's some sense here, but I can't help thinking that we are talking about testing in this podcast, and we're spending our time inventing situations in which we're not gonna test. It all seems a bit inverted to me. How about instead you just do your testing and then if/when a situation arises you then deal with it, instead of deciding there will be situations and justifying to yourselves why you oughtn't test in the first place.

But I also have to wonder: why the perceived rush here? What's wrong with putting aside 30min to test stuff if it "proves" that your work has maintained stability, and you'll be less likely to need that midnight hotfix? What percentage of the whole cycle time of feature request to delivery is that 30 minutes? Especially if taking the effort to write the tests in the first place will innately improve the stability of your code, and then help to keep it stable? It's a false economy.

Tim @ 23:15:

When we have contractors do work for us. I require unit tests. I require so much testing just because it's a way for me to validate the truth of what they're saying they've done. So that everything that we have that's done by third parties is very well tested, and it's fantastic because I have a high level of confidence.

Well: precisely. Why do you not want that same level of confidence in your in-house work? Like you say: confidence is fantastic. Be fantastic, Tim. Also: any leader should eat their own dogfood I think. If there's sense in you making the contractors work like this, clearly you ought to be working that way yourself.

Tim @ 23:36:

Any time I start a new project, if I have a greenfields project, I always start with some level of unit tests, and then I get so involved in the actual architecture of the system that I put it off, and like "well I don't really need a test for this", "I'm not really sure where I'm going with this, so I'm not going to write a test first" because I'm kinda experimenting. Then my experiment becomes reality, then my reality becomes the released version. And then it's like "well what's the point of writing a test now?"

I think we've all been there. I think what Tim needs here is just a bit more self-discipline in identifying what is "architectural spike" and what's "now doing the work". If one is doing TDD, then the spike can be used to identify the test cases (eg "it's going to need to capture their phone number") without necessarily writing the test to prove the phone number has been captured. So you write this:

describe("my new thing", function () {

it ("needs to capture the phone number", function () {

// @todo need to test this

});

});

And then when you detect you are not spiking any more, you write the test, and then introduce the code to make the test pass. I also think Tim is overlooking that the tests are not simply there for that first iteration, they are then there proving that code is stable for the rest of the life of the code. This… builds confidence.

Adam @ 24:22:

That's what testing is all about, right? It's increasing confidence that you can deploy this code and nothing is going to be wrong with it. […] When I think about testing, the pinnacle of testing for me is 100% confidence that I can deploy on my way out the door at 4:55pm on Friday afternoon, with [a high degree of ~] confidence that I am not going to get paged on Saturday at 4am because some of that code that I just deployed… it went "wrong".

Exactly.

Carol @ 25:12:

What difference between the team I'm on and the team you guys have is we have I think it's 15-ish people touching the exact same code daily. So a patch I can put out today may have not even been in the codebase they pulled yesterday when they started working on a bug, or a week ago when they had theirs. So me writing that little extra bit of test gives them some accountability for what I've done, and me some.

Again: exactly.

Ben @ 26:36:

Even if you have a huge test suite, I can't help but think you have to do the manual testing, because what if something critical was missed. [...] I think the exhaustive test suite, what that does is it catches unexpected bugs unrelated. Or things that broke because you didn't expect them to break in a certain way. And I think that's very important.

To Ben's first point, you could just as easily (and arguably more validly) switch that around: a human doing ad-hoc manual testing is more likely to miss something, because every manual test run is at their whim and subject to their focus and attention at the time. Whereas the automated tests - which let's not forget were written by a diligent human, but right at the time they are most focused on the requirements - are run by the computer and it will do exactly the same job every time. What having the historical corpus of automated tests gives you is increased confidence that all that stuff being tested still works the way it is supposed to, so the manual testing - which is always necessary - can be more a case of dotting the Is and crossing the Ts. With no automated tests, the manual tests need to be exhaustive. And the effort needs to be repeated every release (Adam mentions this a few minutes later as well).

To the second point: yeah precisely. Automated tests will pick up regressions. And the effort to do this only needs to be done once (writing the test). Without automated tests, you rely on the manual testing to pick this stuff up, but - being realistic - if your release is focused on PartX of the code, your manual tests are going to focus there, and possibly not bother to re-test PartZ which has just inadvertently been broken by PartX's work.

Ben also mentions this quote from Rich Hickey "Q: What happened to every bug out there? A: it passed the type checker, and it passed all tests." (I found this reference on Google: Simple Made Easy, it's at about 15:45). It's a nifty quote, but what it's also saying is that there wasn't actually a test for the buggy behaviour. Because if there was one: the test would have caught it. The same could be said more readily of manual-only testing. Obviously nothing is going to be 100%, but automated tests are going to be more reliable at maintaining the same confidence level, and be less effort, than manual-only testing.

Ben @ 28:15:

When people say it increases the velocity of development over time. I have trouble embracing that.

(Ben's also alluding back to a comment he made immediately prior to that, relating to always needing to manually test anyhow). "Over time" is one of the keys here. Once a test is written, it's there. It sticks around. Every subsequent test round there is no extra effort to test that element of the application (Tim draws attention to this a coupla minutes later too). With manual testing the effort needs to be duplicated every time you test. Surely this is not complicated to understand. Ben's point about "you still need to manually test" misses the point that if there's a foundation of automated tests, your manual testing can become far more perfunctory. Without the tests: the manual testing is monolithic. Every. Single. Time. To be honest though, I don't know why I need to point this out. It's a) obvious; and b) very well-trod ground. There's an entire industry that thinks automated tests are the foundation of testing. And then there's Ben who's "just not sure". This is like someone "just not sure" that the world isn't actually flat. It's no small amount of hubris on his part, if I'm honest. And obviously Ben is not the only person out there in the same situation. But he's the one here on this podcast supposedly discussing testing.

Ben @ 37:08:

One thing that I've never connected with: when I hear people talk about testing, there's this idea of being able to - I think they call them spies? - create these spies where you can see if private methods get called in certain ways. And I always think to myself: "why do you care about your private methods?" That's an implementation detail. That private method may not exist next week. Just care about what your public methods are returning and that should inherently test your private methods. And people have tried to explain it to me why actually sometimes you wanna know, but I've just never understood it.

Yes good point. I can try to explain. I think there's some nuance missing in your understanding of what's going on, and what we're testing. It starts with your position that testing is only concerning itself with (my wording, paraphrasing you from earlier) "you're interested in what values a public method takes, and what it returns". Not quite. You care about given inputs to a unit, whether the behaviour within the unit correctly provides the expected outputs from the unit. The outputs might not be the return value. Think about a unit that takes a username and password, hashes the password, and saves it to the DB. We then return the new ID of the record. Now… we're less interested in the ID returned by the method, we are concerned that the hashing takes place correctly. There is an output boundary of this unit at the database interface. We don't want our tests to actually hit the database (too slow, as Adam found out), but we mock-out the DB connector or the DAO method being called that takes the value that the model layer has hashed. We then spy on the values passed to the DB boundary, and make sure it's worked OK. Something like this:

describe("my new thing", function () {

it ("hashes the password", function () {

testPassword = "letmein"

expectedHash = "whatevs"

myDAO = new Mock(DAO)

myDAO.insertRecord.should.be.passed(anything(), expectedHash)

myService = new Service(myDAO)

newId = myService.addUser("LOGIN_ID_NOT_TESTED", testPassword)

newId.should.be.integer() // not really that useful

});

});

class Service {

private dao

Service(dao) {

this.dao = dao

}

addUser(loginId, password) {

hashedPassword = excellentHashingFunction(password)

return this.dao.insertRecord(loginId, hashedPassword)

}

}

class DAO {

insertRecord(loginId, password) {

return db.insertQuery("INSERT INTO users (loginId, password) VALUES (:loginId, :password)", [loginId, password])

}

}

OK so insertRecord isn't a private method here, but the DAO is just an abstraction from the public interface of the unit anyhow, so it amounts to the same thing, and it makes my example clearer. insertRecord could be a private method of Service.

So the thing is that you are checking boundaries, not specifically method inputs/outputs.
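For anyone who wants to poke at this, here's a minimal runnable sketch of the same idea in plain Node.js, with a hand-rolled spy instead of a mocking library. It's only an illustration under some assumptions: createDaoSpy and the canned ID of 42 are hypothetical names of mine, and SHA-256 is just a stand-in for excellentHashingFunction so the example runs (it's not a recommendation for real password storage).

```javascript
const crypto = require("node:crypto");

// Stand-in for excellentHashingFunction from the pseudocode (assumption: SHA-256,
// purely so the example runs; don't store real passwords this way)
function excellentHashingFunction(password) {
    return crypto.createHash("sha256").update(password).digest("hex");
}

class Service {
    constructor(dao) {
        this.dao = dao;
    }

    addUser(loginId, password) {
        const hashedPassword = excellentHashingFunction(password);
        return this.dao.insertRecord(loginId, hashedPassword);
    }
}

// Hypothetical hand-rolled spy: it never touches a database, it just records
// what crossed the DAO boundary and returns a canned ID
function createDaoSpy(cannedId) {
    const calls = [];
    return {
        calls,
        insertRecord(loginId, password) {
            calls.push({ loginId, password });
            return cannedId;
        }
    };
}

const testPassword = "letmein";
const daoSpy = createDaoSpy(42);
const service = new Service(daoSpy);

const newId = service.addUser("LOGIN_ID_NOT_TESTED", testPassword);

// The interesting output is what was passed to the boundary, not the return value
const passedToDao = daoSpy.calls[0];
console.log(passedToDao.password !== testPassword); // the plain text never reached the DAO
console.log(passedToDao.password === excellentHashingFunction(testPassword));
console.log(newId); // not really that useful
```

The test never cares how insertRecord is implemented, only that the value arriving at the boundary is the hashed one.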

Also, yes, the implementation of DAO might change tomorrow. But if we're doing TDD - and we should be - the tests will be updated at the same time. More often than not though, the implementation isn't as temporary as this line of thought often assumes (for the convenience of the argument, I suspect).

Adam @ 48:41:

The more that I learn how to test well, and the more that I write good tests, the more I become a believer in automated testing (Carol: Amen). […] The more I do it the better I get. And the better I get the more I appreciate what I can get from it.

Indeed.

Tim @ 49:32:

In a business I think that short term testing is a sunk cost maybe, but long term I have seen the benefit of it. Particularly whenever you are adding stuff to a mature system, those tests pay dividends later. They don't pay dividends now […] (well they don't pay as many dividends now) […] but they do pay dividends in the long run.

Also a good quote / mindset. Testing is about the subsequent rounds of development as much as the current one.

Ben @ 50:05:

One thing I've never connected with emotionally, when I hear people talk about testing, is when they refer to tests as providing documentation about how a feature is supposed to work. And as someone who has tried to look at tests to understand why something's not working, I have found that they provide no insight into how the feature is supposed to work. Or I guess I should say specifically they don't provide answers to the question that I have.

Different docs. They don't provide developer docs, but if following BDD practices, they can indicate the expected behaviour of the piece of functionality. Here's the test run from some tests I wrote recently:

> nodejs@1.0 test

> mocha test/**/*.js

Tests for Date methods functions

Tests Date.getLastDayOfMonth method

✓ returns Jan 31, given Jan 1

✓ returns Jan 31, given Jan 31

✓ returns Feb 28, given Feb 1 in 2021

✓ returns Feb 29, given Feb 1 in 2020

✓ returns Dec 31, given Dec 1

✓ returns Dec 31, given Dec 31

Tests Date.compare method

✓ returns -1 if d1 is before d2

✓ returns 1 if d1 is after d2

✓ returns 0 if d1 is the same d2

✓ returns 0 if d1 is the same d2 except for the time part

Tests Date.daysBetween method

✓ returns -1 if d1 is the day before d2

✓ returns 1 if d1 is the day after d2

✓ returns 0 if d1 is the same day as d2

✓ returns 0 if d1 is the same day as d2 except for the time part

Tests for addDays method

✓ works within a month

✓ works across the end of a month

✓ works across the end of the year

✓ works with zero

✓ works with negative numbers

Tests a method Reading.getEstimatesFromReadingsArray that returns an array of Readings representing month-end estimates for the input range of customer readings

Tests for validation cases

✓ should throw a RangeError if the readings array does not have at least two entries

✓ should not throw a RangeError if the readings array has at least two entries

✓ should throw a RangeError if the second date is not after the first date

Tests for returned estimation array cases

✓ should not include a final month-end reading in the estimates

✓ should return the estimate between two monthly readings

✓ should return three estimates between two reading dates with three missing estimates

✓ should return the integer part of the estimated reading

✓ should return all estimates between each pair of reading dates, for multiple reading dates

✓ should not return an estimate if there was an actual reading on that day

✓ should return an empty array if all readings are on the last day of the month

✓ tests a potential off-by-one scenario when the reading is the day before the end of the month

Tests for helper functions

Tests for Reading.getEstimationDatesBetweenDates method

✓ returns nothing when there are no estimates dates between the test dates

✓ correctly omits the first date if it is an estimation date

✓ correctly omits the second date if it is an estimation date

✓ correctly returns the last date of the month for all months between the dates

Test Timer

✓ handles a lap (100ms)

Test TimerViaPrototype

✓ handles a lap (100ms)

36 passing (221ms)

root@1b011f8852b1:/usr/share/nodeJs#

When I showed this to the person I was doing the work for, he immediately said "no, that test case is wrong, you have it around the wrong way", and he was right, and I fixed it. That's the documentation "they" are talking about.

Oh, and Carol goes on to confirm this very thing one minute later.

Also bear in mind that just cos a test could be written in such a way as to impart good clear information doesn't mean that all tests do. In my experience, after looking at open-source projects' tests to get any clarity on things (and I include testing frameworks' own tests in this!), I am left knowing less than I did before I looked. It's almost like there's a rule in OSS projects that the code needs to be shite or they won't accept it ;-)

And that's it. It was an interesting podcast, but I really really strongly disagreed with most of what Ben said, and why he said it. It would be one thing if he was held to account (and the others tried this at times), but as it is, other than joking that Ben is a nae-sayer, I think there's some dangerous content in here.

Oh, one last thing… in the outro the team suggests some resources for testing. Most of what they suggested seems to be "what to do", not "why you do it". I think the first thing one should do when considering testing is to read Test Driven Development by Kent Beck. Start with that. Oh this reminds me… not actually much discussion on TDD in this episode. TDD is tangential to testing per se, but it's an important topic. Maybe they can do another episode focusing on that.

Follow-up

The Working Code Podcast team have responded to my observations here in a subsequent podcast episode, which you can listen to here: 011: Listener Questions #1. Go have a listen.

Righto.

--

Adam

Saturday, 17 December 2016

That new Star Wars movie

If you need to be told that an article entitled "That new Star Wars movie" is perhaps going to discuss that new Star Wars movie, and intrinsically that's going to include details of the plot then... well here you go:

This contains spoilers.

I'll leave some space before I say anything else, in case that's not clear enough for you, and you think "oh, I hope what Cameron says here doesn't discuss that new Star Wars movie... I haven't seen it and I don't want it spoiled".

Oh for fuck's sake why do I do this to myself?

Firstly, Adam Tuttle is dead right on two counts:

@DAC_dev I don't believe for one second that you would let a Star Wars movie be released and not see it in theaters, regardless of reviews.— 🎄dam T🎁ttle (@AdamTuttle) December 17, 2016

This is true. I watched the trailers for this and thought: "haha, nicely played: this doesn't look like crap!", and pretty much decided to see it on that basis. He followed up with this:

@DAC_dev it's more a situation of tradition and ritual at this point. New Star Trek movies too: they're hit or miss but I won't miss one.— 🎄dam T🎁ttle (@AdamTuttle) December 17, 2016

I'm the same. I've not actually enjoyed one of these movies since the third one. To save some confusion: I don't give a shit how Lucas decided to number these, or whether they're prequels, sequels or spin offs: I number them chronologically based on their release dates. In this case I mean: Return of the Jedi: the third one. I liked that one. I was 13 when I saw it (in 1983), and I've always had a soft spot for "space ships and laser guns" movies. And it was a good kids' movie. And I was a kid.

It demonstrates AdamT's point that I also knew what date this new one was being released (well: within a week or so of the date: "early Dec"), and I figured a coupla nights ago... I'd be in Galway on Saturday afternoon with two options:

- sit at the pub and write a blog article about consuming SOAP web services with PHP. Oh, and drink Guinness;

- or go to the local cinema and kill a coupla hours watching this movie first.

But anyway AdamT was right... there was some anticipation from me to get to see this latest Star Wars movie, and given - despite my best efforts - I don't actually enjoy them, I suspect it's just the nostalgia thing he mentioned.

So, yeah, I scooted down to the cinema and watched the movie. Interestingly there was only about another dozen people in the auditorium. I guess it's either not the draw "a Star Wars movie" used to have, or Saturday afternoon is not a popular time, or the Irish are too sensible to waste their time on such nonsense. I like to think it's the latter.

In case you don't know, this one is the story about how the goodies got hold of the Death Star plans just before the beginning of the first movie, and got them to Princess Leia and R2D2 and what not, and off they went to be chased by that Star Destroyer, and the rest of Star Wars goes from there.

Here's the problem with this new one from the outset: we already know the goodies in this movie succeed in their efforts to steal the plans, and we also know that they all die. Hey I told you there was going to be spoilers. Why did we know they'd all be dead at the end of this one? Well if they weren't they'd still be around for Star Wars etc, wouldn't they?

As a sidebar I am a big fan of the movie Alien, and before I developed a sense for decent movie writing, I also really liked Aliens. I always thought it'd be way cool if there was a linking story covering the period at the LV426 colony before they all got wiped out (some scenes of this made it into the extended mix of Aliens)... showing the colonists being overwhelmed, and only two of them being alive at the end. But even then it occurred to me the denouement wouldn't work as we already know what it would be.

Same here with this movie: Felicity Jones (I've no idea what her character name was: it didn't matter) was always gonna end up dead. And that other geezer she was with. Dead. Along with all the red shirts they were with. Well obviously they were gonna end up dead: they were only making up the numbers (this includes the yeah-we-get-it-it's-The-Force blind dude and his... brother? Pal? Who knows? Who cares?). This was, accordingly, a movie without any real overarching sense of drama.

One thing I did like about this movie was all the nods to the earlier movies. I'd usually think this would be a self-indulgent / self-knowing / "Joss-Whedon-esque" sort of movie making, but hey, this thing is a nostalgia exercise more than anything else, so why not. I mention this cos I chuckled when they did indeed open with a triangular thing coming down from the top of the screen - eg: the Star Destroyer in the first movie - but this time it wasn't some big spaceship, it was a visual illusion of the way a planet's rings were in the planet's shadow (you'll need to see the scene to get what I mean). On the whole these things were inconsequential but I spotted a few, and they made me chuckle.

I was surprised to see Mads Mikkelsen in this (slumming it slightly, IMO), and he delivered his lines well and seemed convincing in his role... although it was a pretty small if pivotal one. I mention this because most of the rest of the acting was either pretty bland, or the players were the victim of pretty turgid writing (I was reminded of Harrison Ford's quote "George, you can type this shit, but you can't say it!". This was alive and well in this movie too, despite Lucas having nothing to do with it).

Forest Whitaker was a prime example of victimised actor here. His lines were so awful even he couldn't save them. I actually wonder if there was more material for his character originally which was excised, cos I really don't see why he was in the movie.

Felicity Jones was OK, but in comparing her to... ooh... [thinks]... Daisy Ridley in the preceding movie, of whom I thought "hey, you've made a good character here!", I didn't get the same reaction. But she was completely OK, and one of the few people not over-egging their performances.

The comedy robot sidekick was better than usual, but it was still a comedy robot sidekick. It's interesting though that - on reflection - it was probably the second-most-rounded-out character after Jones's one. More so than all the other humans.

On the whole the script was dire. Uncharacteristically for me I found myself repeatedly muttering "for fuck's sake: really? Did you really just make the poor actor utter that line?" But I have to realise it's aimed at people with limited attention spans, and limited... well... age. Be that chronologically or... well... ahem.

What's with the capes? Why did that dude... the baddy guy who wasn't Tarkin or Darth Vader... wear a uniform which otherwise would never have a cape (none of the other dudes with the same uniform had one), but with a cape basically clipped on to it? Who the fuck has worn a cape since the Edwardian era?? I never understood that about the likes of Batman or Superman either. Fucking daft.

But actually that baddy guy wasn't too badly drawn and portrayed either. He didn't seem "one note evil" like CGI Cushing or Darth Vader. Vader with his cape. Fuckin' dick.

(later update: shit it was Ben Mendelsohn. Fair enough he did a decent job then. Thankfully his script wasn't as bad as Whitaker's)

The visual composition of the thing was impressive, as one would expect from one of these movies. I think they overdid the "huge impressive but strangely odd design for the given situation" buildings a bit. And it was clear there was a design session of "we need new environments... these things always have new environments... I know: rain! Let's do rain! We've not done rain before! Oh and Fiji too. Let's make one of the planets look like Fiji: we've not done that before either". Still: it all looked impressive. It also pretty much looked real too, which is an improvement on some of its predecessors.

There was also a lot of "exciting" action set-pieces, except for the fact they're not exciting at all, because we all know that the action bits during the body of the movie will only ever serve to maintain the attention-span of the viewers between sections of exposition or travel to the next set piece, and nothing really important will happen during them. Like key characters being killed or anything. Just the red shirts (or white-suited storm-troopers in this case).

All I could think in the final space battle thing was that - once again - they've committed too many resources to this thing: there's not a few TIE Fighters, there's a bloody million of them, so obviously any of that action is not going to actually contribute to the plot, as there's no way the goodies can realistically beat them ship to ship, so something else needs to happen. So: no drama, and might as well not bother. It doesn't even look impressive as lots of small things whizzing around the place is not impressive. So it's just a matter of sitting there going "oh just get on with it, FFS. Get back to the plot".

I also thought the people in the Star Wars technical design dept should talk to the ones from the Battlestar Galactica one. Those were capital ships. None of this "we'll fire a coupla laser beams at you every few seconds", but "we'll throw up a wall of lead and fire, and small craft just ain't getting through it". Star Wars capital ships just aren't impressive. Oh, OK, I did like the way they finally got rid of the shield gateway thingey though. That was cool. It also reminded me of the scene in RotJ when one of the star destroyers lost control, and crashed into the surface of the Death Star.

Speaking of which: why was there no mention of the fact they were building two Death Stars? Those things would take ten years to build, so they were clearly both underway at the same time.

I did think "ooh shit, now yer fucked" when the AT-ATs showed up at the beach battle. Although obviously they were just gonna get destroyed or just not matter anyhow. I grant some of the ways they were destroyed struck a chord, especially in contrast to their seemingly imperviousness (is that a word?) in The Empire Strikes Back. The rendering of them made them look solid and foreboding though. Completely impractical, but quite foreboding.

How come only one person in the movie had a fully-automatic weapon? And why did it have a slide action (like a pump-action shotgun) which occasionally needed using?

What was with that computer of theirs? At the end when they were trying to get the plans, why did it need a manually controlled thingey to find the hard drive that they were looking for? I realise it was a plot device to slow things down a bit so the baddie could get there for that final showdown, but is that the best they could come up with? Shitty, lazy-arse writing.

Why was the controller console for the satellite dish way out there at the end of that catwalk?

Why did the Death Star miss from that range? I mean other than a setup so that the two goodies weren't instantly vaporised, instead giving them a moment to be reunited and have a wee hug (that was telegraphed too. Sigh) before being all tsunami-ed.

Why the fuck do I keep going to these movies?

In the end, I'd rate this movie as follows:

- visually impressive in a vapid way;

- not bad for a Star Wars movie. Probably the "best" one since RotJ;

- but let's make it clear: that's damning it with faint praise. This is an intellectually barren movie, aimed at kids (at least psychologically, if not chronologically). In that I know adults that actually like this shit, it's just further proof of the infantilisation of our culture, and at that I despair.

- I'll give it 6/10 mostly cos it does indeed achieve what it sets out to do... I'm just not the right audience for it. But seeing the kiddies waiting outside for the next session all excited made me remember what it was like when I first went to Star Wars, aged eight, 38 years ago.

- If I was a kiddie, it'd be an 8/10, I reckon. It's a bit bleak for a kiddie though, as they won't understand all the rest of the story kicks-off from the end of this one. That and that pretty much everyone of note in the movie dies. But at least they wouldn't notice how fucking stupid and bad almost all of the human-element of the movie was.

Oh... I thought the very ending was good: getting the plans to Leia's spaceship and off they went... 5min later for the Star Wars plot to start. That was all right.

Right. Another Guinness and I better try to find 1000-odd words to write about SOAP.

Sorry for the off-topic shite, but writing this all down here will save me some Twitter conversations about it. And, hey, it's possible click bait ;-)

Righto.

--

Adam

Tuesday, 19 January 2016

Floating point arithmetic with decimals

As a human... what is the value of z, after you process this pseudocode with your wetware:

x = 17.76

y = 100

z = x * y

Hopefully you'd say "1776". It was not a trick question.

And that's an integer, right? Correct.

CFML

Now... try this CFML code:

x = 17.76;

y = 100;

z = x*y;

writeOutput(z);

1776

So far so good. But what about this:

writeOutput(isValid("integer", z));

You might think "YES" (or true if yer on Lucee), however it's "NO".

And this is where young players fall into the trap. They get all annoyed with isValid() getting it wrong, etc. Which, to be fair, is a reasonable assumption with isValid(), but it's not correct in this instance. It's the young player who is mistaken.

If we now do this:

writeOutput(z.getClass().getName());

We get:

java.lang.Double

OK, but 1776 can be a Double, sure. But CFML should still consider a Double 1776 as a valid integer, as it should be able to be treated like one. So why doesn't it? What if we circumvent CFML, and go straight to Java:

writeOutput(z.toString());

1776.0000000000002

Boom. Floating point arithmetic inaccuracy.

Never ever ever forget, everyone... when you multiply floating point numbers with decimals... you will get "unexpected" (but you should pretty much expect it!) floating point accuracy issues. This is for the perennial reason that what's easy for us to express in decimal is actually quite hard for a computer to translate into binary accurately.
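One common way to cope with this is to round the result (or compare within a small tolerance) before treating it as an integer. Here's a minimal sketch in Java. The class name, the isNearlyInteger() helper and the 1e-9 tolerance are all made up for illustration, and the right tolerance depends on your data:

```java
class RoundingDemo {
    // Hypothetical helper: true when value is within epsilon of a whole number.
    static boolean isNearlyInteger(double value, double epsilon) {
        return Math.abs(value - Math.rint(value)) < epsilon;
    }

    public static void main(String[] args) {
        double z = 17.76 * 100;

        System.out.println(z);                        // 1776.0000000000002
        System.out.println(z == 1776.0);              // false: the exact comparison fails
        System.out.println(Math.round(z));            // 1776: rounded to the nearest long
        System.out.println(isNearlyInteger(z, 1e-9)); // true: close enough to a whole number
    }
}
```

Rounding is fine when you know the value is supposed to be a whole number. The tolerance check is the safer option when you're not sure, because it won't quietly turn 1776.5 into an integer.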

Aside: we were chatting about all this on the CFML Slack channel this morning, and one person asked "OK, so how come 17.75 x 100 works and 17.76 x 100 does not?". This is because a computer can represent 0.75 in binary exactly (2^-1 + 2^-2), whereas 0.76 can only be approximated, hence causing the "issue".
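The 17.75 vs 17.76 difference can be checked directly. The sketch below compares the double a decimal literal is stored as against the decimal value as written. It leans on the BigDecimal(double) constructor, which exposes the exact binary value of the double; the class and method names are hypothetical:

```java
import java.math.BigDecimal;

class BinaryFractionDemo {
    // Hypothetical helper: true when the double nearest to the text
    // holds exactly the decimal value written in the text.
    static boolean isExactlyRepresentable(String decimalText) {
        double asDouble = Double.parseDouble(decimalText);
        // BigDecimal(double) is the exact value of the double;
        // BigDecimal(String) is the exact value of the decimal text.
        return new BigDecimal(asDouble).compareTo(new BigDecimal(decimalText)) == 0;
    }

    public static void main(String[] args) {
        System.out.println(isExactlyRepresentable("17.75")); // true
        System.out.println(isExactlyRepresentable("17.76")); // false

        // Print the exact values the two doubles actually hold.
        System.out.println(new BigDecimal(17.75));
        System.out.println(new BigDecimal(17.76));
    }
}
```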

The problem really is that CFML should simply output 1776.0000000000002 when we ask it, and it should not try to be clever and hide this stuff. Because it's significant information. Then when the young player output the value, they'd go "oh yeah, better round that" or whatever they need to do before proceeding. CFML is not helping here.

This is pretty ubiquitous in programming. Let's have a trawl through the various languages I can write the simplest of code in:

JavaScript

x = 17.76;

y = 100;

z = x * y

console.log(z);

1776.0000000000002

JS just does what it's told. Unsurprisingly.

Groovy

x = 17.76

y = 100

z = x * y

println "x * y: " + z

println "x: " + x.getClass().getName()

println "y: " + y.getClass().getName()

println "z: " + z.getClass().getName()

println "z: " + z.toString()

x * y: 1776.00

x: java.math.BigDecimal

y: java.lang.Integer

z: java.math.BigDecimal

z: 1776.00

This is interesting. Groovy treats a decimal literal as a BigDecimal rather than a binary float, so the result stays exact, and it keeps as many decimal places as the total number expressed in its factors. That's how I was taught to do it in Physics at school, so I like this. This second example makes it more clear:

x = 3.30

y = 7.70

z = x * y

println "x * y: " + z

println "x: " + x.getClass().getName()

println "y: " + y.getClass().getName()

println "z: " + z.getClass().getName()

println "z: " + z.toString()

x * y: 25.4100

x: java.math.BigDecimal

y: java.math.BigDecimal

z: java.math.BigDecimal

z: 25.4100

In 3.30 and 7.70 there are four decimal places expressed (ie: two for each factor), so Groovy maintains that accuracy. Nice!

Java

import java.math.BigDecimal;

class JavaVersion {

public static void main(String[] args){

double x = 17.76;

int y = 100;

System.out.println(x*y);

BigDecimal x2 = new BigDecimal(17.76);

BigDecimal y2 = new BigDecimal(100);

System.out.println(x2.multiply(y2));

}

}

Here I added a BigDecimal variation because I was trying to see why the Groovy code behaved the way it did, but it didn't answer my question. I suspected that how it decided on the accuracy of the result was perhaps a BigDecimal thing, but this test didn't show that:

1776.0000000000002

1776.000000000000156319401867222040891647338867187500

This is a good demonstration of how a simple base-10 decimal fraction is actually a recurring (non-terminating) fraction in binary, so it can only ever be stored as an approximation.
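A likely explanation for the Groovy result: the Java test above built its BigDecimal values from double literals, so the binary approximation was already baked in before BigDecimal got involved. Here's a sketch that builds them from strings instead, on the assumption (consistent with the Groovy output, but not something verified here) that Groovy creates its BigDecimal literals from the source text. BigDecimal.multiply() returns a result whose scale is the sum of the scales of the two operands, which would account for the trailing zeros. The class and method names are hypothetical:

```java
import java.math.BigDecimal;

class BigDecimalScaleDemo {
    // Hypothetical helper: multiplies two decimal literals given as text,
    // so no binary double is involved at any point.
    static BigDecimal multiplyLiterals(String a, String b) {
        return new BigDecimal(a).multiply(new BigDecimal(b));
    }

    public static void main(String[] args) {
        BigDecimal z1 = multiplyLiterals("17.76", "100");
        System.out.println(z1 + " (scale " + z1.scale() + ")"); // 1776.00 (scale 2)

        BigDecimal z2 = multiplyLiterals("3.30", "7.70");
        System.out.println(z2 + " (scale " + z2.scale() + ")"); // 25.4100 (scale 4)

        // The double constructor carries the binary approximation along with it.
        System.out.println(new BigDecimal(17.76).equals(new BigDecimal("17.76"))); // false
    }
}
```

The practical upshot is to pass strings (or use BigDecimal.valueOf()) rather than double literals when creating a BigDecimal, if the point is to avoid floating point inaccuracy.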

Sunday, 18 January 2015

REST Web APIs: The Book... competition winner

A few weeks ago I ran a "competition" to win a copy of Adam Tuttle's "REST Web APIs: The Book" book.

I have to admit that it'd slipped my mind until I was reminded about it a coupla days back.

In a very partial (sic) way I have selected a winner at not-at-all-random...

Tuesday, 23 December 2014

Book review: REST Web APIs: The Book (win a copy here)

If you're a CFML user, you probably already know that one of the cornerstone members of the CFML community - Adam Tuttle - has recently written a book "REST Web APIs: The Book". I had the privilege of being one of the pre-release reviewers - from a content and language perspective - and Adam has now asked me if I could flesh out my perceptions a bit, as a book review. I've never been asked to do a book review before. Blimey.

Thursday, 18 December 2014

Book review: Adam Tuttle's newest book

Adam's been busy recently. You'll've heard about his new book "

I know I have derided CFClient a lot, but it does have its good bits, and Adam has worked through them all and put a fairly accessible book together. Granted it's not very long, but for something that focuses on a single tag, I think that's fair enough. It's free and open source, so you should go get it, and give it a read. It might make you think again about whether or not to use CFClient. I have to admit it did give me pause for thought.

Go grab it from its official website now (it's just a PDF): "CFClient The Good Parts".

I think Abram Adams (of trycf.com fame) said it best in his review when he said:

I felt like I was looking into the minds of Adobe engineers

It's exactly this attention to detail Adam Tuttle captures in this latest book.

CFML doyen Mark Drew had this to say:

I have been looking everywhere for an in-depth look at the useful features of the cfclient tag and I have to thank Mr Tuttle for providing it!

I am not good at reviews but I should say that this is not only The Good Parts, it is also the definitive guide.

5/5

I'll get back to you about the REST book next week (here it is: "Book review: REST Web APIs: The Book (win a copy here)"). The CFClient book'll keep you going until then.

Cheers.

--

Adam

Saturday, 18 October 2014

TickintheBox

I watched a good amount of bickering between two fellas who should know better last night:

@AdamTuttle @bdw429s fellas.

— Adam Cameron (@DAC_dev) October 17, 2014

(click through and read the "conversation" if you want to. I'll not reproduce it here as it's a bit embarrassing for the participants, I think).

Also, I "published" some misinformation of my own about CommandBox a day or so ago (as a comment on Cutter's blog: "What's Wrong With ColdFusion - 2014 Edition"). So I figured I owed it to Brad and Luis to actually have a look at CommandBox (which I do like the sound of, see "Ortus does what Adobe / Railo ought to have done...").

Saturday, 11 October 2014

If you're missing the @CFMLNotifier feed: @CFNotifications has picked up the slack

SSIA, really. Adam Tuttle just asked if I still did the @CFMLNotifier Twitter feed; I do not.

But there's the @CFNotifications feed which covers the same ground, and does a more polished job of it than I ever did. This new feed is managed by Stephen Walker, of @cfuser fame.

Wednesday, 3 September 2014

Wrong wrong wrong, Cameron is wrong

Adam Tuttle and I were talking on IRC about some of my code today - in the context of closure - and I brashly asserted the code might implement closure, but it didn't actually use it, so it was a bad example of closure in action (like, admittedly, almost all examples people use when demonstrating it). I further posited I could simply use declared functions instead of inline function expressions and the code would still work, thus demonstrating my case.

TL;DR: I was wrong about that. But it doesn't sit entirely well with me, so here's the code.

Friday, 8 August 2014

CFML: <cfcatch>: my ignorance is reduced. Over a decade after it should have

Update:

I had to take this article down for a few hours as I ballsed up both the code and the analysis! Thanks to Adam Tuttle for noticing (or making me revisit it so I noticed it, anyhow).

It pleases me when I learn something I didn't know about fundamental parts of CFML. I temporarily feel daft, but I'm used to that.

Ray - amidst a fiery exchange of disagreement last night - set me straight on a feature of CFML's exception-handling that I was completely unaware of. Despite it being well documented. Since ColdFusion 4.5. Cool!

I had never noticed this from the <cfcatch> docs:

The custom_type type is a developer-defined type specified in a cfthrow tag. If you define a custom type as a series of strings concatenated by periods (for example, "MyApp.BusinessRuleException.InvalidAccount"), ColdFusion can catch the custom type by its character pattern. ColdFusion searches for a cfcatch tag in the cftry block with a matching exception type, starting with the most specific (the entire string), and ending with the least specific.

The "funny" (at my expense) thing here is that not only did I not know that, I had actually wanted <cfcatch> to work that way, and just ass-u-me'd it didn't so never tried it! Fuckwit.

Here's an example:

Wednesday, 29 January 2014

Enhancement suggestion for parseDateTime()

A quick one.

parseDateTime() is used for taking a string and trying to convert it into a date object (as reflected by the date represented by the string). It does a pretty shocking job of it, as demonstrated by the following code:

Wednesday, 1 January 2014

CFML: Using Query.cfc doesn't have to be the drama Adobe wants it to be

Yesterday / today I was talking to me mate Adam Tuttle about the drawbacks of ColdFusion's Query.cfc, and using it. Adam's blogged about this ("Script Queries Are Dead; Long Live Script Queries!"), and written a proof of concept of how it could be better done.

This got me thinking about how bad Query.cfc's intended usage needs to be.

I'll start by saying - "repeating", actually, as I will say this to anyone who will listen - what a shitty abomination of an implementation Query.cfc (and its ilk) is. It's the worst implementation of functionality ColdFusion has. It is the nadir of "capability" demonstrated by the Adobe ColdFusion Team. Whoever is responsible for it should be removed from the team, and never be allowed to interfere with our language ever again.

But anyway.

OK, so Adobe's idea of how we should query a database via CFScript is this:

queryService = new query();

queryService.setDatasource("cfdocexamples");

queryService.setName("GetParks");

queryService.setcachedwithin(CreateTimeSpan(0, 6, 0, 0));

queryService.addParam(name="state",value="MD",cfsqltype="cf_sql_varchar");

queryService.addParam(value="National Capital Region",cfsqltype="cf_sql_varchar");

result = queryService.execute(sql="SELECT PARKNAME, REGION, STATE FROM Parks WHERE STATE = :state and REGION = ? ORDER BY ParkName, State ");

GetParks = result.getResult();

That's from the docs. I'm not making that up. No wonder people think it's clumsy. Compare the above to the equivalent <cfquery>:

<cfquery name="GetParks" datasource="cfdocexamples" cachedwithin="#createTimeSpan(0, 6, 0, 0)#">

SELECT PARKNAME, REGION, STATE

FROM Parks

WHERE STATE = <cfqueryparam value="MD" cfsqltype="cf_sql_varchar">

and REGION = <cfqueryparam value="National Capital Region" cfsqltype="cf_sql_varchar">

ORDER BY ParkName

</cfquery>

Monday, 21 October 2013

CFML: Community collaboration: fixing some bugs in my defer() function

I released a UDF defer() to CFLib and Code Review yesterday. And Adam Tuttle quickly spotted a shortfall in my implementation. And whilst addressing that I spotted two more bugs, which I have now fixed.

Adam's observation was thus:

you're not doing a thread join in this code anywhere, which means that if the deferred job takes longer than the rest of the request to run, it will not be able to affect the response.

[...]