G'day:

That's probably a fairly enigmatic title if you have not read the preceding article: "Vue.js: using TDD to develop a data-entry form". It's pretty long-winded, but the gist of it is summarised in this copy and pasted tract:

Right, so the overall requirement here is (this is copied and pasted from the previous article) to construct an event registration form (personal details, a selection of workshops to register for), save the details to the DB, and echo back a success page. Simple stuff. Less so for me, given I'm using tooling I'm still only learning (Vue.js, Symfony, Docker, Kahlan, Mocha, MariaDB).

There have been two articles around this work so far:

There's also a much longer series of articles about me getting the Docker environment running with containers for Nginx, Node.js (and Vue.js), MariaDB and PHP 8 running Symfony 5. It starts with "Creating a web site with Vue.js, Nginx, Symfony on PHP8 & MariaDB running in Docker containers - Part 1: Intro & Nginx" and runs for 12 articles.

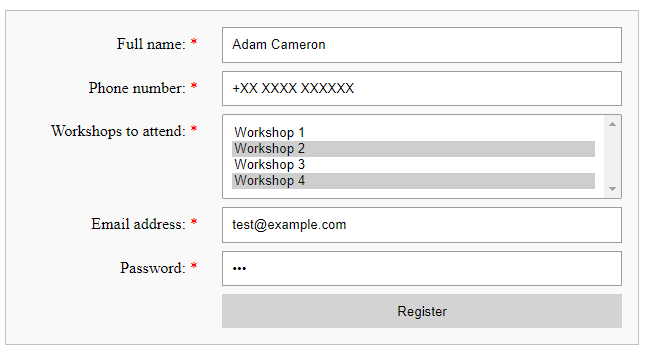

In the previous article I did the UI for the workshop registration form…

… and the summary one gets after submitting one's registration:

Today we're creating the back-end endpoint to fetch the list of workshops in that multiple select, and also another endpoint to save the registration details that have been submitted (I ran out of time for this bit). The database we'll be talking to is as follows:

(BTW, dbdiagram.io is a bloody handy website... I just did a mysqldump of my tables (no data), imported it into their online charting tool, and... done. Cool).

Now… as for me and Symfony… I'm really only starting out with it. The entirety of my hands-on exposure to it is documented in "Part 6: Installing Symfony" and "Part 7: Using Symfony". And the "usage" was very superficial. I'm learning as I go here.

And as always: I will be TDDing every step, using a tight cycle: identify a case (eg: "it needs to return a 200-OK status for GET requests on the endpoint /workshops"); create tests for that case; write the implementation code for just that case.

It needs to return a 200-OK status for GET requests on the /workshops endpoint

This is a pretty simple test:

```php
namespace adamCameron\fullStackExercise\spec\functional\Controller;

use adamCameron\fullStackExercise\Kernel;
use Symfony\Component\HttpFoundation\Request;
use Symfony\Component\HttpFoundation\Response;

describe('Tests of WorkshopController', function () {
    beforeAll(function () {
        $this->request = Request::createFromGlobals();
        $this->kernel = new Kernel('test', false);
    });

    describe('Tests of doGet', function () {
        it('needs to return a 200-OK status for GET requests', function () {
            $request = $this->request->create("/workshops/", 'GET');
            $response = $this->kernel->handle($request);

            expect($response->getStatusCode())->toBe(Response::HTTP_OK);
        });
    });
});
```

I make a request, I check the status code of the response. That's it. And obviously it fails:

```text
root@58e3325d1a16:/usr/share/fullstackExercise# vendor/bin/kahlan --spec=spec/functional/Controller/workshopController.spec.php --lcov="var/tmp/lcov/coverage.info" --ff
[error] Uncaught PHP Exception Symfony\Component\HttpKernel\Exception\NotFoundHttpException: "No route found for "GET /workshops/"" at /usr/share/fullstackExercise/vendor/symfony/http-kernel/EventListener/RouterListener.php line 136

F 1 / 1 (100%)

Tests of WorkshopController
Tests of doGet
✖ it needs to return a 200-OK status for GET requests
expect->toBe() failed in `.spec/functional/Controller/workshopController.spec.php` line 22

It expect actual to be identical to expected (===).

actual:
(integer) 404
expected:
(integer) 200
```

Perfect. Now let's add a route. And probably wire it up to a controller class and method I guess. Here's what I've got:

```yaml
# backend/config/routes.yaml
workshops:
    path: /workshops/
    controller: adamCameron\fullStackExercise\Controller\WorkshopsController::doGet
```

```php
// backend/src/Controller/WorkshopsController.php
namespace adamCameron\fullStackExercise\Controller;

use Symfony\Bundle\FrameworkBundle\Controller\AbstractController;
use Symfony\Component\HttpFoundation\JsonResponse;

class WorkshopsController extends AbstractController
{
    public function doGet() : JsonResponse
    {
        return new JsonResponse(null);
    }
}
```

Initially this continued to fail with a 404. Eventually I worked out that Symfony caches a bunch of stuff when it's not in debug mode, so it wasn't seeing the new route and/or the controller until I changed the Kernel object initialisation to switch debug on:

```php
// from workshopsController.spec.php, as above:
$this->kernel = new Kernel('test', true);
```

And then the actual code ran. Which is nice:

```text
Passed 1 of 1 PASS in 0.108 seconds (using 8MB)
```

(from now on I'll just let you know if the tests pass, rather than spit out the output).

Bye Kahlan, hi again PHPUnit

I've been away from this article for an entire day. A lot of that time went on trying to get Kahlan to play nicely with Symfony, and on trying to stop Kahlan's own functionality from obstructing my progress. However, I've hit a wall with some of the bugs I've found in it, specifically "Documented way of disabling patching doesn't work #378" and "Bug(?): Double::instance doesn't seem to cater to stubbing methods with return-types #377". These render Kahlan unusable for me.

The good news is I sniffed around PHPUnit a bit more, and discovered its testdox functionality. This lets me write my test cases in good BDD fashion and have them show up in the test results. It'll either "rehydrate" a human-readable string from the test name (testTheMethodDoesTheThing becomes "The method does the thing"), or one can specify an actual case via the @testdox annotation on methods and on the classes themselves (I'll show you below). This means PHPUnit will achieve what I need, so I'm back to using that.
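To make the "rehydration" idea concrete, here is a rough approximation in plain PHP of what testdox does to a method name. It strips the `test` prefix, splits on capital letters, lowercases the words, then capitalises the first letter. `prettifyTestName` is a hypothetical helper for illustration only: it is not PHPUnit's API, and PHPUnit's real prettifier handles more edge cases than this.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: a rough approximation of how testdox turns a
 * camelCase test-method name into a readable sentence.
 * Not PHPUnit's implementation; it ignores digits, underscores, acronyms etc.
 */
function prettifyTestName(string $methodName): string
{
    // Drop the conventional "test" prefix
    if (str_starts_with($methodName, 'test')) {
        $methodName = substr($methodName, strlen('test'));
    }

    // Split before each capital letter: "TheMethodDoes" => ["The", "Method", "Does"]
    $words = preg_split('/(?=[A-Z])/', $methodName, -1, PREG_SPLIT_NO_EMPTY);

    // Lower-case everything, then capitalise the first letter of the sentence
    return ucfirst(strtolower(implode(' ', $words)));
}

echo prettifyTestName('testTheMethodDoesTheThing'), PHP_EOL; // The method does the thing
```

With the @testdox annotation you skip this step entirely and supply the sentence yourself, which is what the tests below do.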

OK, backing up slightly and switching over to the PHPUnit test (backend/tests/functional/Controller/WorkshopsControllerTest.php):

```php
/**
 * @testdox it needs to return a 200-OK status for GET requests
 * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
 */
public function testDoGetReturns200()
{
    $this->client->request('GET', '/workshops/');

    $this->assertEquals(Response::HTTP_OK, $this->client->getResponse()->getStatusCode());
}
```

```text
> vendor/bin/phpunit --testdox 'tests/functional/Controller/WorkshopsControllerTest.php' '--filter=testDoGetReturns200'
PHPUnit 9.5.2 by Sebastian Bergmann and contributors.

Tests of WorkshopController
 ✔ it needs to return a 200-OK status for GET requests

Time: 00:00.093, Memory: 12.00 MB

OK (1 test, 1 assertion)

Generating code coverage report in HTML format ... done [00:00.682]
root@5f9133aa9de3:/usr/share/fullstackExercise#
```

Cool.

It returns a collection of workshop objects, as JSON

The next case is:

```php
/**
 * @testdox it returns a collection of workshop objects, as JSON
 */
public function testDoGetReturnsJson()
{
}
```

This is a bit trickier, in that I actually need to write some application code now. And wire it into Symfony so it works. And also test it. Via Symfony's wiring. Eek.

Here's a sequence of thoughts:

- We are getting a collection of Workshop objects from [somewhere] and returning them in JSON format.

- IE: that would be a WorkshopCollection.

- The values for the workshops are stored in the DB.

- The WorkshopCollection will need a way of getting the data into itself, by calling some method like loadAll.

- That will need to be called by the controller, so the controller will need to receive a WorkshopCollection via Symfony's DI implementation.

- A model class like WorkshopCollection should not be busying itself with the vagaries of storage. It should hand that off to a repository class (see "The Repository Pattern"), which will handle the fetching of DB data and translating it from a recordset to an array of Workshop objects.

- As WorkshopsRepository will contain testable data-translation logic, it will need unit tests. However we don't want to have to hit the DB in our tests, so we will need to abstract the part of the code that gets the data into something we can mock away.

- As we're using Doctrine/DBAL to connect to the database, and I'm a believer in "don't mock what you don't own", we will put a thin (-ish) wrapper around that as WorkshopsDAO. This is not completely "thin" because it will "know" the SQL statements to send to its connector to get the data, and will also "know" the DBAL statements to get the data out and pass back to WorkshopsRepository for modelling.
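Here is a minimal sketch of the bottom two layers of that chain in plain PHP, with no Symfony and no database. It assumes a hypothetical `WorkshopsDataSource` interface and an `InMemoryWorkshopsDAO` test double, neither of which exists in this project. The point is that the repository only depends on "something that returns rows", so a test can hand it canned rows instead of a DBAL-backed DAO. (The implementation in this article takes a different route: it mocks the concrete WorkshopsDAO class with PHPUnit rather than introducing an interface.)

```php
<?php
declare(strict_types=1);

// Minimal stand-in for the Workshop model
final class Workshop
{
    public function __construct(public int $id, public string $name)
    {
    }
}

// Hypothetical seam: anything that can hand back raw workshop rows
interface WorkshopsDataSource
{
    /** @return array<int, array{id: int|string, name: string}> */
    public function selectAll(): array;
}

// Test double: canned rows, no DB connection involved
final class InMemoryWorkshopsDAO implements WorkshopsDataSource
{
    public function __construct(private array $rows)
    {
    }

    public function selectAll(): array
    {
        return $this->rows;
    }
}

// The repository owns the row-to-object translation, which is the bit worth unit testing
final class WorkshopsRepository
{
    public function __construct(private WorkshopsDataSource $dao)
    {
    }

    /** @return Workshop[] */
    public function selectAll(): array
    {
        return array_map(
            // cast id, as DB drivers may hand numeric columns back as strings
            fn (array $row) => new Workshop((int) $row['id'], $row['name']),
            $this->dao->selectAll()
        );
    }
}

$repository = new WorkshopsRepository(new InMemoryWorkshopsDAO([
    ['id' => 1, 'name' => 'Workshop 1'],
    ['id' => 2, 'name' => 'Workshop 2'],
]));
$workshops = $repository->selectAll();

echo count($workshops), ' workshops, first is "', $workshops[0]->name, '"', PHP_EOL;
```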

That seems like a chunk to digest. I don't want you to think I have already written any of this code: this is just the sequence of thoughts that leads me to a strategy for handling the next case. From the first few items in that list I can derive roughly how the test will need to work. The controller doesn't care how this WorkshopCollection gets its data, but it needs to be able to tell it to get it. We'll mock that bit out for now, just so we can focus on the controller code, and work our way back from the mock in another test. For now we have backend/tests/functional/Controller/WorkshopsControllerTest.php:

```php
/**
 * @testdox it returns a collection of workshop objects, as JSON
 * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
 */
public function testDoGetReturnsJson()
{
    $workshops = [
        new Workshop(1, 'Workshop 1'),
        new Workshop(2, 'Workshop 2')
    ];

    $this->client->request('GET', '/workshops/');

    $resultJson = $this->client->getResponse()->getContent();
    $result = json_decode($resultJson, false);

    $this->assertCount(count($workshops), $result);
    array_walk($result, function ($workshopValues, $i) use ($workshops) {
        $workshop = new Workshop($workshopValues->id, $workshopValues->name);
        $this->assertEquals($workshops[$i], $workshop);
    });
}
```
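A quick aside on the `json_decode($resultJson, false)` call in that test. With `false` (which also matches the behaviour you get if you omit the argument), JSON objects come back as `stdClass` instances. That is why the test reads `->id` and `->name` to rebuild `Workshop` objects before comparing. Passing `true` gives associative arrays instead. Here is a small standalone check, using a hard-coded JSON string in the same shape as the endpoint's response:

```php
<?php
declare(strict_types=1);

$json = '[{"id":1,"name":"Workshop 1"},{"id":2,"name":"Workshop 2"}]';

// false: JSON objects become stdClass instances
$asObjects = json_decode($json, false);

// true: JSON objects become associative arrays
$asArrays = json_decode($json, true);

var_dump($asObjects[0]->name);  // string(10) "Workshop 1"
var_dump($asArrays[0]['name']); // string(10) "Workshop 1"

// Casting a decoded stdClass to an array makes it directly comparable with an expected array
var_dump((array) $asObjects[0] === ['id' => 1, 'name' => 'Workshop 1']); // bool(true)
```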

To make this pass we need just enough code for it to work:

```php
class WorkshopsController extends AbstractController
{
    private WorkshopCollection $workshops;

    public function __construct(WorkshopCollection $workshops)
    {
        $this->workshops = $workshops;
    }

    public function doGet() : JsonResponse
    {
        $this->workshops->loadAll();

        return new JsonResponse($this->workshops);
    }
}

class WorkshopCollection implements \JsonSerializable
{
    /** @var Workshop[] */
    private $workshops;

    public function loadAll()
    {
        $this->workshops = [
            new Workshop(1, 'Workshop 1'),
            new Workshop(2, 'Workshop 2')
        ];
    }

    public function jsonSerialize()
    {
        return $this->workshops;
    }
}
```

And thanks to the autowiring in Symfony's dependency-injection service container, all that just works, just like that. That's the test for that endpoint done.
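For anyone wondering what autowiring actually does: the container inspects each constructor's parameter types and builds those dependencies first, recursively. Here is a deliberately tiny toy version using PHP's Reflection API, purely to illustrate the idea. `ToyAutowirer` and the `Demo*` classes are hypothetical. Symfony's real container is compiled ahead of time and handles far more (interfaces, aliases, scalar arguments, factories and so on).

```php
<?php
declare(strict_types=1);

/**
 * Toy illustration of constructor autowiring: resolve each constructor
 * parameter by its class type, recursively, caching one instance per class.
 * Not how Symfony implements it; no interfaces, scalars or cycles handled.
 */
final class ToyAutowirer
{
    /** @var array<string, object> already-built instances, keyed by class name */
    private array $instances = [];

    public function get(string $class): object
    {
        if (isset($this->instances[$class])) {
            return $this->instances[$class];
        }

        $reflection = new ReflectionClass($class);
        $arguments = [];

        foreach ($reflection->getConstructor()?->getParameters() ?? [] as $parameter) {
            $type = $parameter->getType();
            if (!$type instanceof ReflectionNamedType || $type->isBuiltin()) {
                throw new LogicException('Cannot autowire parameter ' . $parameter->getName() . ' of ' . $class);
            }
            // Build (or reuse) the dependency before the class that needs it
            $arguments[] = $this->get($type->getName());
        }

        return $this->instances[$class] = $reflection->newInstanceArgs($arguments);
    }
}

// Hypothetical classes mirroring the controller -> collection -> repository -> DAO chain
final class DemoDAO
{
}

final class DemoRepository
{
    public function __construct(public DemoDAO $dao)
    {
    }
}

final class DemoCollection
{
    public function __construct(public DemoRepository $repository)
    {
    }
}

final class DemoController
{
    public function __construct(public DemoCollection $workshops)
    {
    }
}

$container = new ToyAutowirer();
$controller = $container->get(DemoController::class);

echo get_class($controller->workshops->repository->dao), PHP_EOL; // DemoDAO
```

Like Symfony's services, which are shared by default, the toy hands back the same instance each time a class is requested.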

Now, there was all that bumpf I mentioned about repositories and DAOs and connectors and stuff. As part of the refactoring step, we are going to push our implementation right back to the DAO. This allows us to complete the code in WorkshopCollection and WorkshopsRepository, and just mock out the DAO for now.

```php
class WorkshopCollection implements \JsonSerializable
{
    private WorkshopsRepository $repository;

    /** @var Workshop[] */
    private $workshops;

    public function setRepository(WorkshopsRepository $repository) : void
    {
        $this->repository = $repository;
    }

    public function loadAll()
    {
        $this->workshops = $this->repository->selectAll();
    }

    public function jsonSerialize()
    {
        return $this->workshops;
    }
}
```

My thinking here is:

- It's going to need a WorkshopsRepository to get stuff from the DB.

- It doesn't seem right to me to pass a dependency into a model as a constructor argument. The model should work without needing a DB connection; only the methods around storage interaction should require the repository. On the other hand, the only thing the collection does now is load the stuff from the DB and serialise it, so I'm kinda coding for the future here, and I don't like that. But I'm sticking with it for reasons we'll come to below.

- I also really hate model classes with getters and setters. This is usually a sign of bad OOP. But here I have a setter, to get the repository in there.

The reason (it's not a reason, it's an excuse) I'm using a setter instead of passing in the repo as a constructor argument is that I wanted to check out how Symfony's service config dealt with this. If yer classes all have type-checked constructor args, Symfony just does it all automatically with no code at all (just a config switch). However, to get setRepository called I used a factory method. Symfony's service config can also do setter injection directly via a `calls` entry on the service, so the factory isn't strictly necessary. The config for it is thus (in backend/config/services.yaml):

```yaml
# backend/config/services.yaml (these entries sit under the existing services: key)
adamCameron\fullStackExercise\Factory\WorkshopCollectionFactory: ~

adamCameron\fullStackExercise\Model\WorkshopCollection:
    factory: ['@adamCameron\fullStackExercise\Factory\WorkshopCollectionFactory', 'getWorkshopCollection']
```

Simple! And the code for WorkshopCollectionFactory:

```php
class WorkshopCollectionFactory
{
    private WorkshopsRepository $repository;

    public function __construct(WorkshopsRepository $repository)
    {
        $this->repository = $repository;
    }

    public function getWorkshopCollection() : WorkshopCollection
    {
        $collection = new WorkshopCollection();
        $collection->setRepository($this->repository);

        return $collection;
    }
}
```

Also very simple. But, yeah, it's an exercise in messing about, and there's no way I should have done this. I should have just used a constructor argument. Anyway, moving on.
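Here is a small sketch of one concrete argument for that constructor argument. With setter injection, nothing stops code from calling loadAll() before setRepository(), and PHP only objects at runtime, when the uninitialised typed property is read. With constructor injection that half-built state can't exist. The classes below are hypothetical, trimmed-down stand-ins (the repository just returns two names).

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for the real repository
class WorkshopsRepository
{
    public function selectAll(): array
    {
        return ['Workshop 1', 'Workshop 2'];
    }
}

// Setter injection: the object can exist in a half-configured state
class SetterInjectedCollection
{
    private WorkshopsRepository $repository;
    private array $workshops = [];

    public function setRepository(WorkshopsRepository $repository): void
    {
        $this->repository = $repository;
    }

    public function loadAll(): array
    {
        // Throws Error if setRepository() was never called
        return $this->workshops = $this->repository->selectAll();
    }
}

// Constructor injection: impossible to build without its dependency
class ConstructorInjectedCollection
{
    private array $workshops = [];

    public function __construct(private WorkshopsRepository $repository)
    {
    }

    public function loadAll(): array
    {
        return $this->workshops = $this->repository->selectAll();
    }
}

$setterError = null;
try {
    (new SetterInjectedCollection())->loadAll();
} catch (Error $e) {
    $setterError = $e->getMessage();
}
// Typed property SetterInjectedCollection::$repository must not be accessed before initialization
echo $setterError, PHP_EOL;

$loaded = (new ConstructorInjectedCollection(new WorkshopsRepository()))->loadAll();
echo implode(', ', $loaded), PHP_EOL;
```

As far as I can tell, switching WorkshopCollection to the constructor version would let autowiring build it unaided, so the factory class and its two services.yaml entries could go.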

The WorkshopsRepository is very simple too:

```php
class WorkshopsRepository
{
    private WorkshopsDAO $dao;

    public function __construct(WorkshopsDAO $dao)
    {
        $this->dao = $dao;
    }

    /** @return Workshop[] */
    public function selectAll() : array
    {
        $records = $this->dao->selectAll();

        return array_map(
            function ($record) {
                return new Workshop($record['id'], $record['name']);
            },
            $records
        );
    }
}
```

I get some records from the DAO, and map them across to Workshop objects. Oh! Workshop:

```php
class Workshop implements \JsonSerializable
{
    private int $id;
    private string $name;

    public function __construct(int $id, string $name)
    {
        $this->id = $id;
        $this->name = $name;
    }

    public function jsonSerialize()
    {
        return (object) [
            'id' => $this->id,
            'name' => $this->name
        ];
    }
}
```
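It's worth spelling out how the two jsonSerialize() methods combine. json_encode() calls WorkshopCollection::jsonSerialize(), gets back a plain array of Workshop objects, then calls each Workshop's own jsonSerialize() in turn. Without JsonSerializable, json_encode() would only see public properties, so objects with private properties like these would come out as `{}`. Here is a standalone check with trimmed copies of the two classes. In this sketch the collection takes its workshops as a constructor argument, purely to skip the repository.

```php
<?php
declare(strict_types=1);

// Trimmed copy of Workshop
class Workshop implements JsonSerializable
{
    public function __construct(private int $id, private string $name)
    {
    }

    public function jsonSerialize(): object
    {
        return (object) ['id' => $this->id, 'name' => $this->name];
    }
}

// Trimmed collection: workshops passed in directly instead of loaded via a repository
class WorkshopCollection implements JsonSerializable
{
    /** @param Workshop[] $workshops */
    public function __construct(private array $workshops)
    {
    }

    public function jsonSerialize(): array
    {
        // json_encode() then serialises each Workshop via its own jsonSerialize()
        return $this->workshops;
    }
}

$json = json_encode(new WorkshopCollection([
    new Workshop(1, 'Workshop 1'),
    new Workshop(2, 'Workshop 2'),
]));

echo $json, PHP_EOL; // [{"id":1,"name":"Workshop 1"},{"id":2,"name":"Workshop 2"}]
```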

And lastly I mock WorkshopsDAO. I can't implement any further down the stack of this process because the DAO is what uses the DBAL Connection object. I don't own that, so if I actually started to use it, I'd be hitting the DB. Or hitting the ether and getting an error. Either way: no good for our test. So, a mocked DAO:

```php
class WorkshopsDAO
{
    public function selectAll() : array
    {
        return [
            ['id' => 1, 'name' => 'Workshop 1'],
            ['id' => 2, 'name' => 'Workshop 2']
        ];
    }
}
```

Having done all that refactoring, we check if our test is still good, and it is. I can verify this is not a trick of the light by changing some of that data in the DAO and watching the test break (which it does). I can also now go back to the test and stick some more code-coverage annotations in:

```php
/**
 * @testdox it returns a collection of workshop objects, as JSON
 * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
 * @covers \adamCameron\fullStackExercise\Factory\WorkshopCollectionFactory
 * @covers \adamCameron\fullStackExercise\Repository\WorkshopsRepository
 * @covers \adamCameron\fullStackExercise\Model\WorkshopCollection
 * @covers \adamCameron\fullStackExercise\Model\Workshop
 */
```

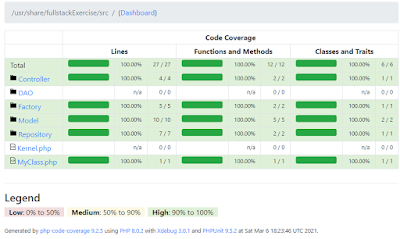

And see that all the code is indeed covered:

It returns the expected workshops from the database

But now we need to implement the real DAO. Once we do that, our test will break: the DAO will suddenly start hitting the DB, and we'll be getting back whatever is in the DB, not our expected canned response. Plus we don't want this test to hit the DB anyhow. So first we're gonna mock out the DAO using PHPUnit's mocks instead of our code-mock. Doing this turned out to be a bit tricky initially, given Symfony's DI container is looking after all the dependencies for us. Fortunately, when in test mode, Symfony allows us to hack into that container. I've updated my test, thus:

```php
public function testDoGetReturnsJson()
{
    $workshopDbValues = [
        ['id' => 1, 'name' => 'Workshop 1'],
        ['id' => 2, 'name' => 'Workshop 2']
    ];

    $this->mockWorkshopDaoInServiceContainer($workshopDbValues);

    // ... unchanged ...

    array_walk($result, function ($workshopValues, $i) use ($workshopDbValues) {
        // json_decode(..., false) yields stdClass items; cast so assertEquals
        // compares array with array (the cast is a no-op if it's already an array)
        $this->assertEquals($workshopDbValues[$i], (array) $workshopValues);
    });
}

private function mockWorkshopDaoInServiceContainer($returnValue = []): void
{
    $mockedDao = $this->createMock(WorkshopsDAO::class);
    $mockedDao->method('selectAll')->willReturn($returnValue);

    $container = $this->client->getContainer();
    $workshopRepository = $container->get('test.WorkshopsRepository');

    // swap the repository's private $dao for the mock
    $reflection = new \ReflectionClass($workshopRepository);
    $property = $reflection->getProperty('dao');
    $property->setAccessible(true);
    $property->setValue($workshopRepository, $mockedDao);
}
```

We are popping a mocked WorkshopsDAO into the WorkshopsRepository object in the container, so when the repo calls it, it'll be calling the mock.
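The reflection trick in mockWorkshopDaoInServiceContainer() is plain PHP and works on any object, not just Symfony services. Here is a standalone sketch with hypothetical `Repository`, `RealDao` and `FakeDao` classes. The fake is a subclass of the real DAO, much as a PHPUnit mock created with createMock() is, so it satisfies the typed property it is written into.

```php
<?php
declare(strict_types=1);

class RealDao
{
    public function selectAll(): array
    {
        throw new RuntimeException('would hit the database');
    }
}

// Subclass of RealDao, so it is accepted by the typed $dao property below
class FakeDao extends RealDao
{
    public function selectAll(): array
    {
        return [['id' => 1, 'name' => 'Workshop 1']];
    }
}

class Repository
{
    public function __construct(private RealDao $dao)
    {
    }

    public function selectAll(): array
    {
        return $this->dao->selectAll();
    }
}

$repository = new Repository(new RealDao());

// Overwrite the private $dao property from outside the class
$property = new ReflectionProperty(Repository::class, 'dao');
if (PHP_VERSION_ID < 80100) {
    $property->setAccessible(true); // only needed before PHP 8.1
}
$property->setValue($repository, new FakeDao());

print_r($repository->selectAll());
```

The property's type declaration still applies here: setValue() throws a TypeError if the replacement isn't a RealDao or subclass of it.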

Oh! To be able to access that 'test.WorkshopsRepository' container key, we need to expose it via the services_test.yaml container config:

```yaml
services:
    test.WorkshopsRepository:
        alias: adamCameron\fullStackExercise\Repository\WorkshopsRepository
        public: true
```

And running that, the test works, and is ignoring the real DAO.

To test the final DAO implementation, we're gonna do an end-to-end test:

```php
use Doctrine\DBAL\Connection;
use Symfony\Bundle\FrameworkBundle\KernelBrowser;
use Symfony\Bundle\FrameworkBundle\Test\WebTestCase;
use Symfony\Component\Dotenv\Dotenv;

class WorkshopControllerTest extends WebTestCase
{
    private KernelBrowser $client;

    public static function setUpBeforeClass(): void
    {
        $dotenv = new Dotenv();
        $dotenv->load(dirname(__DIR__, 3) . "/.env.test");
    }

    protected function setUp(): void
    {
        $this->client = static::createClient(['debug' => false]);
    }

    /**
     * @testdox it returns the expected workshops from the database
     * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
     * @covers \adamCameron\fullStackExercise\Factory\WorkshopCollectionFactory
     * @covers \adamCameron\fullStackExercise\Model\WorkshopCollection
     * @covers \adamCameron\fullStackExercise\Repository\WorkshopsRepository
     * @covers \adamCameron\fullStackExercise\Model\Workshop
     */
    public function testDoGet()
    {
        $this->client->request('GET', '/workshops/');

        $response = $this->client->getResponse();
        $workshops = json_decode($response->getContent(), false);

        /** @var Connection */
        $connection = static::$container->get('database_connection');
        // same DBAL calls as the DAO uses
        $expectedRecords = $connection
            ->executeQuery("SELECT id, name FROM workshops ORDER BY id ASC")
            ->fetchAllAssociative();

        $this->assertCount(count($expectedRecords), $workshops);
        array_walk($expectedRecords, function ($record, $i) use ($workshops) {
            // assertEquals, not assertSame: the DB driver may return id as a string
            $this->assertEquals($record['id'], $workshops[$i]->id);
            $this->assertSame($record['name'], $workshops[$i]->name);
        });
    }
}
```

The test is pretty familiar, except it's actually getting its expected data from the database, and making sure the whole process, end to end, is doing what we want. Currently when we run this it fails because we still have our mocked DAO in place (the real mock, not the… mocked mock. Um. You know what I mean: the actual DAO class that just returns hard-coded data). Now we put the proper DAO code in:

```php
use Doctrine\DBAL\Connection;

class WorkshopsDAO
{
    private Connection $connection;

    public function __construct(Connection $connection)
    {
        $this->connection = $connection;
    }

    public function selectAll() : array
    {
        $sql = "
            SELECT
                id, name
            FROM
                workshops
            ORDER BY
                id ASC
        ";
        $statement = $this->connection->executeQuery($sql);

        return $statement->fetchAllAssociative();
    }
}
```

And now if we run the tests:

```text
> vendor/bin/phpunit --testdox
PHPUnit 9.5.2 by Sebastian Bergmann and contributors.

Tests of WorkshopController
 ✔ it needs to return a 200-OK status for GET requests
 ✔ it returns a collection of workshop objects, as JSON

Tests of baseline Symfony install
 ✔ it displays the Symfony welcome screen
 ✔ it returns a personalised greeting from the /greetings end point

PHP config tests
 ✔ gdayWorld.php outputs G'day world!

Webserver config tests
 ✔ It serves gdayWorld.html with expected content

End to end tests of WorkshopController
 ✔ it returns the expected workshops from the database

Tests that code coverage analysis is operational
 ✔ It reports code coverage of a simple method correctly

Time: 00:00.553, Memory: 22.00 MB

OK (8 tests, 26 assertions)

Generating code coverage report in HTML format ... done [00:00.551]
root@5f9133aa9de3:/usr/share/fullstackExercise#
```

Nice one!

And if we look at code coverage:

I was gonna try to cover the requirements for the process to save the form fields in this article too. But it took ages to work out how some of the Symfony stuff worked, plus I sunk about a day into trying to get Kahlan to work, and this article is already super long anyhow. I now have enough of the back-end processing sorted out to update the front-end form so it actually uses the values from the DB instead of test values. I might look at that tomorrow (see "TDDing the reading of data from a web service to populate elements of a Vue JS component" for that exercise). I need a rest from Symfony.

It needs to drink some beer

I'm rushing the outro of this article because I am supposed to be on a webcam with a beer in my hand in 33min, and I need to proofread this still…

Righto.

--

Adam