G'day:

Being back in CFML land, I spend a lot of time using trycf.com to write sample code. Sometimes I just want to see how ColdFusion and Lucee behave differently in a given situation. Sometimes I'm "helping" someone on the CFML Slack channel, and want to give them a runnable example of what I think they might want to be doing. trycf.com is cool, but I usually want to write my example code as a series of tests demonstrating variants of its behaviour. I also generally want to TDD even sample code that I write, so I know I'm staying on-point and the code works without any surprises. trycf.com is a bit basic though: one can only really have one CFML script and run that. One cannot import other libraries like TestBox, so I can't write my code the way I want.

Quite often I write a little stub tester to help me, and I find myself doing this over and over again, and I never think to save these stubs across examples. Plus having to include the stubs along with the sample code I'm writing clutters things a bit, and also means my example code doesn't really stick to the Single Responsibility Principle.

I found out from Abram - owner of trycf.com - that one can specify a setupCodeGistId in the trycf.com URL, and it will load a CFML script from that and pre-include that before the scratch-pad code. This is bloody handy, and armed with this knowledge I decided I was gonna knock together a minimal single-file testing framework which I could include transparently in with a scratch-pad file, so that scratch-pad file could focus on the sample code I'm writing, and the testing of the same.

A slightly hare-brained idea I have had when doing this is to TDD the whole exercise… and to use the test framework to test itself as it evolves. Obviously to start with the tests will need to be separate pieces of code before the framework is usable, but beyond a point it should work well enough for me to be able to use it to test the latter stages of its development. Well: we'll see, anyhow.

Another challenge I am setting for myself is that I'm gonna mimic the "syntax" of TestBox, so that the tests I write in a scratch-pad should be able to be lifted-out as-is and dumped into a TestBox test spec CFC. Obviously I'm not going to reimplement all of TestBox, just a subset of stuff to be able to do simple tests. I think I will need to implement the following functions:

- void run() - well, like in TestBox all my tests will be implemented in a function called run, and then one runs that to execute the tests. This is just convention rather than needing any development.

- void describe(required string label, required function testGroup) - this will output its label and call its testGroup.

- void it(required string label, required function implementation) - this will output its label and call its implementation. I'm pretty sure the implementation of describe and it will be identical. I mean like I will use two references to the same function to implement this.

- struct expect(required any actual) - this will take the actual value being tested, and return a struct containing a matcher to that actual value.

- boolean toBe(required any expected) - this will take the value that the actual value passed to expect should be. This will be a key in the struct returned by expect (this will emulate the function-chaining TestBox uses with expect(x).toBe(y)).

If I create that minimalist implementation, then I will be able to write this much of a test suite in a trycf.com scratch pad:

function myFunctionToTest(x) {

// etc

}

function run() {

describe("Tests for myFunctionToTest" ,() => {

it("tests some variant", () => {

expect(myFunctionToTest("variant a")).toBe("something")

})

it("tests some other variant", () => {

expect(myFunctionToTest("variant b")).toBe("something else")

})

})

}

run()

And everything in that run function will be compatible with TestBox.

I am going to show every single iteration of the process here, to demonstrate TDD in action. This means this article will be long, and it will be code-heavy. And it will have a lot of repetition. I'll try to keep my verbiage minimal, so if I think the iteration of the code speaks for itself, I possibly won't have anything to add.

Let's get on with it.

It runs the tests via a function "run"

<cfscript>

// tests

try {

run()

writeOutput("OK")

} catch (any e) {

writeOutput("Failure: run function not found")

}

</cfscript>

<cfscript>

// implementation

</cfscript>

I am going to do this entire implementation in a trycf.com scratch-pad file. As per above, I have two code blocks: tests and implementation. For each step I will show you the test (or updates to existing tests), and then I will show you the implementation. We can take it as a given that the test will fail in the way I expect (which will be obvious from the code), unless I state otherwise. As a convention a test will output "OK" if it passed, or "Failure: " and some error explanation if not. Later these will be baked into the framework code, but for now, it's hand-cranked. This shows that you don't need any framework to design your code via TDD: it's a practice, it's not a piece of software.

When I run the code above, I get "Failure: run function not found". This is obviously because the run function doesn't even exist yet. Let's address that.

// implementation

void function run(){

}

Results: OK

We have completed one iteration of red/green. There is nothing to refactor yet. Onto the next iteration.

It has a function describe

We already have some of our framework operational. We can put tests into that run function, and they'll be run: that's about all that function needs to do. Hey I didn't say this framework was gonna be complicated. In fact the aim is for it to be the exact opposite of complicated.

// tests

// ...

void function run(){

try {

describe()

writeOutput("OK")

} catch (any e) {

writeOutput("Failure: describe function not found")

}

}

// implementation

void function describe(){

}

The tests already helped me here actually. In my initial effort at implementing describe, I misspelt it as "desribe". Took me a few seconds to spot why the test failed. I presume, like me, you have found a function with a spelling mistake in it that has escaped into production. I was saved that here, by having to manually type the name of the function into the test before I did the first implementation.

describe takes a string parameter label which is displayed on a line by itself as a label for the test grouping

// tests

// ...

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION")

};

if (testOutput == "TEST_DESCRIPTION<br>") {

writeOutput("OK<br>")

return

}

writeOutput("Failure: label not output<br>")

// implementation

void function describe(required string label){

writeOutput("#label#<br>")

}

This implementation passes its own test (good), but it makes the previous test break:

try {

describe()

writeOutput("OK")

} catch (any e) {

writeOutput("Failure: describe function not found")

}

We require describe to take an argument now, so we need to update that test slightly:

try {

describe("NOT_TESTED")

writeOutput("OK")

} catch (any e) {

writeOutput("Failure: describe function not found")

}

I make a point of being very clear when values I need to pass (arguments etc) are not part of what's being tested.

It's also worth noting that the test output is a bit of a mess at the moment:

OKNOT_TESTED

OKOK

For the sake of cosmetics, I've gone through and put <br> tags on all the test messages I'm outputting, and I've also slapped a cfsilent around that first describe test, as its output is just clutter. The full implementation is currently:

// tests

try {

cfsilent() {

run()

}

writeOutput("OK<br>")

} catch (any e) {

writeOutput("Failure: run function not found<br>")

}

void function run(){

try {

cfsilent(){describe("NOT_TESTED")}

writeOutput("OK<br>")

} catch (any e) {

writeOutput("Failure: describe function not found<br>")

}

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION")

};

if (testOutput == "TEST_DESCRIPTION<br>") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: label not output<br>")

}

}

run()

</cfscript>

<cfscript>

// implementation

void function describe(required string label){

writeOutput("#label#<br>")

}

And now the output is tidier:

OK

OK

OK

I actually did a double-take here, wondering why the TEST_DESCRIPTION message was not displaying for that second test: the one where I'm actually testing that that message displays. Of course it's because I've got the savecontent around it, so I'm capturing the output, not letting it output. Duh.

describe takes a callback parameter testGroup which is executed after the description is displayed

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION", () => {

writeOutput("DESCRIBE_GROUP_OUTPUT")

})

};

if (testOutput == "TEST_DESCRIPTION<br>DESCRIBE_GROUP_OUTPUT") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: testGroup not executed<br>")

}

void function describe(required string label, required function testGroup){

writeOutput("#label#<br>")

testGroup()

}

This implementation change broke earlier tests that called describe, because they were not passing a testGroup argument. I've updated those by just slinging an empty callback into those calls, eg:

describe("TEST_DESCRIPTION", () => {})

That's all I need from describe, really. But I'll just do one last test to confirm that it can be nested OK (there's no reason why it couldn't be, but I'm gonna check anyways).

describe calls can be nested

savecontent variable="testOutput" {

describe("OUTER_TEST_DESCRIPTION", () => {

describe("INNER_TEST_DESCRIPTION", () => {

writeOutput("DESCRIBE_GROUP_OUTPUT")

})

})

};

if (testOutput == "OUTER_TEST_DESCRIPTION<br>INNER_TEST_DESCRIPTION<br>DESCRIBE_GROUP_OUTPUT") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: describe nested did not work<br>")

}

And this passes without any adjustment to the implementation, as I expected. Note that it's OK to write a test that just passes, provided no implementation change is needed to make it pass. One only needs a failing test before one makes an implementation change. Remember the tests are to test that the implementation change does what it's supposed to. This test here is just a belt and braces thing, and to be declarative about some functionality the system has.

The it function behaves the same way as the describe function

it will end up having more required functionality than describe needs, but there's no reason for them to not just be aliases of each other for the purposes of this micro-test-framework. As long as the describe alias still passes all its tests, it doesn't matter what extra functionality I put into it to accommodate the requirements of it.

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION", () => {

it("TEST_CASE_DESCRIPTION", () => {

writeOutput("TEST_CASE_RESULT")

})

})

};

if (testOutput == "TEST_DESCRIPTION<br>TEST_CASE_DESCRIPTION<br>TEST_CASE_RESULT") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the it function did not work<br>")

}

it = describe

it will not error-out if an exception occurs in its callback, instead reporting an error result, with the exception's message

Tests are intrinsically the sort of thing that might break, so we can't have the test run stopping just cos an exception occurs.

savecontent variable="testOutput" {

describe("NOT_TESTED", () => {

it("tests an exception", () => {

throw "EXCEPTION_MESSAGE";

})

})

};

if (testOutput CONTAINS "tests an exception<br>Error: EXCEPTION_MESSAGE<br>") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the it function did not correctly report the test error<br>")

}

void function describe(required string label, required function testGroup) {

try {

writeOutput("#label#<br>")

testGroup()

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

At this point I decided I didn't like how I was just using describe to implement it, so I refactored:

void function runLabelledCallback(required string label, required function callback) {

try {

writeOutput("#label#<br>")

callback()

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

void function describe(required string label, required function testGroup) {

runLabelledCallback(label, testGroup)

}

void function it(required string label, required function implementation) {

runLabelledCallback(label, implementation)

}

Because everything is test-covered, I am completely safe to just make that change. Now I have the correct function signature on describe and it, and an appropriately "general" function to do the actual execution. And the tests all still pass, so that's cool. Reminder: refactoring doesn't need to start with a failing test. Intrinsically it's an activity that mustn't impact the behaviour of the code being refactored, otherwise it's not a refactor. Also note that one does not ever alter implementation and refactor at the same time. Follow red / green / refactor as separate steps.

it outputs "OK" if the test ran correctly

// it outputs OK if the test ran correctly

savecontent variable="testOutput" {

describe("NOT_TESTED", () => {

it("outputs OK if the test ran correctly", () => {

// some test here... this actually starts to demonstrate an issue with the implementation, but we'll get to that

})

})

};

if (testOutput CONTAINS "outputs OK if the test ran correctly<br>OK<br>") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the it function did not output OK for a passing test<br>")

}

The implementation of this demonstrated that describe and it can't share an implementation. I don't want describe calls outputting "OK" when their callback runs OK, and this was what started happening when I did my first pass of the implementation for this:

void function runLabelledCallback(required string label, required function callback) {

try {

writeOutput("#label#<br>")

callback()

writeOutput("OK<br>")

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

Only it is a test, and only it is supposed to say "OK". This is an example of premature refactoring, and probably going overboard with DRY. The describe function wasn't complex, so we were gaining nothing by de-duping it from it, especially when it's implementation will have more complexity still to come.

I backed out my implementation and my failing test for a moment, and did another refactor to separate-out the two functions completely. I know I'm good when all my tests still pass.

void function describe(required string label, required function testGroup) {

writeOutput("#label#<br>")

testGroup()

}

void function it(required string label, required function implementation) {

try {

writeOutput("#label#<br>")

implementation()

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

I also needed to update the baseline it test to expect the "OK<br>". After that everything is green again, and I can bring my new test back (failure as expected), and implementation (all green again/still):

void function it(required string label, required function implementation) {

try {

writeOutput("#label#<br>")

implementation()

writeOutput("OK<br>")

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

describe will not error-out if an exception occurs in its callback, instead reporting an error result, with the exception's message

Back to describe again. It too needs to not error-out if there's an issue with its callback, so we're going to put in some handling for that again too. This test is largely copy and paste from the equivalent it test:

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION", () => {

throw "EXCEPTION_MESSAGE";

})

};

if (testOutput CONTAINS "TEST_DESCRIPTION<br>Error: EXCEPTION_MESSAGE<br>") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the describe function did not correctly report the test error<br>")

}

void function describe(required string label, required function testGroup) {

try {

writeOutput("#label#<br>")

testGroup()

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

it outputs "Failed" if the test failed

This took some thought. How do I know a test "failed"? In TestBox when something like expect(true).toBeFalse() runs, it exits from the test immediately, and does not run any further expectations the test might have. Clearly it's throwing an exception from toBeFalse if the actual value (the one passed to expect) isn't false. So this is what I need to do. I have not written any assertions or expectations yet, so for now I'll just test with an actual exception. It can't be any old exception, because the test could break for any other reason too, so I need to differentiate between a test fail exception (the test has failed), and any other sort of exception (the test errored). I'll use a TestFailedException!

savecontent variable="testOutput" {

describe("NOT_TESTED", () => {

it("outputs failed if the test failed", () => {

throw(type="TestFailedException");

})

})

};

if (testOutput.reFind("Failed<br>$")) {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the it function did not correctly report the test failure<br>")

}

void function it(required string label, required function implementation) {

try {

writeOutput("#label#<br>")

implementation()

writeOutput("OK<br>")

} catch (TestFailedException e) {

writeOutput("Failed<br>")

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

This is probably enough now to a) start using the framework for its own tests; b) start working on expect and toBe.

has a function expect

it("has a function expect", () => {

expect()

})

Check. It. Out. I'm actually able to use the framework now. I run this and I get:

has a function expect

Error: Variable EXPECT is undefined.

And when I do the implementation:

function expect() {

}

has a function expect

OK

I think once I'm tidying up, I might look to move the test result to be on the same line as the label. We'll see. For now though: functionality.

expect returns a struct with a key toBe which is a function

it("expect returns a struct with a key toBe which is a function", () => {

var result = expect()

if (isNull(result) || isNull(result.toBe) || !isCustomFunction(local.result.toBe)) {

throw(type="TestFailedException")

}

})

I'd like to be able to just use !isCustomFunction(local?.result?.toBe) in that if statement there, but Lucee has a bug that prevents ?. from being used in a function expression (I am not making this up, go look at LDEV-3020). Anyway, the implementation for this for now is easy:

function expect() {

return {toBe = () => {}}

}

toBe returns true if the actual and expected values are equal

it("toBe returns true if the actual and expected values are equal", () => {

var actual = "TEST_VALUE"

var expected = "TEST_VALUE"

var result = expect(actual).toBe(expected)

if (isNull(result) || !result) {

throw(type="TestFailedException")

}

})

The implementation for this bit is deceptively easy:

function expect(required any actual) {

return {toBe = (expected) => {

return actual.equals(expected)

}}

}

toBe throws a TestFailedException if the actual and expected values are not equal

it("toBe throws a TestFailedException if the actual and expected values are not equal", () => {

var actual = "ACTUAL_VALUE"

var expected = "EXPECTED_VALUE"

try {

expect(actual).toBe(expected)

} catch (TestFailedException e) {

return

}

throw(type="TestFailedException")

})

function expect(required any actual) {

return {toBe = (expected) => {

if (actual.equals(expected)) {

return true

}

throw(type="TestFailedException")

}}

}

And with that… I have a very basic testing framework. Obviously it could be improved to have more expectations (toBeTrue, toBeFalse, toBe{Type}), and could have some nice messages on the toBe function so it's clearer what went on when a test fails, but for a minimum viable product, this is fine. I'm going to do a couple more tests / tweaks though.
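For what it's worth, here is a minimal sketch of how those extra expectations and failure messages might look. It is not part of the framework built in this article: the toBeTrue and toBeFalse implementations, the failWith helper and the message wording are all assumptions made for illustration.

```cfml
<cfscript>
// Hypothetical extension of expect(): toBeTrue, toBeFalse, failWith and the
// message text are illustrative assumptions, not part of the framework above.
struct function expect(required any actual) {
    // assumed helper: throws the same exception type that `it` catches
    var failWith = (message) => {
        throw(type="TestFailedException", message=message)
    }

    return {
        toBe = (expected) => {
            if (actual.equals(expected)) {
                return true
            }
            failWith("Expected and actual values were not equal")
        },
        toBeTrue = () => {
            if (isBoolean(actual) && actual) {
                return true
            }
            failWith("Expected true")
        },
        toBeFalse = () => {
            if (isBoolean(actual) && !actual) {
                return true
            }
            failWith("Expected false")
        }
    }
}

// usage: a failing expectation now carries a message the caller can report
try {
    expect(true).toBeFalse()
} catch (TestFailedException e) {
    writeOutput("Failed: #e.message#<br>")
}
</cfscript>
```

For the message to show up in a test run, the TestFailedException catch block in it would also need to output e.message, which the version in this article does not do.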

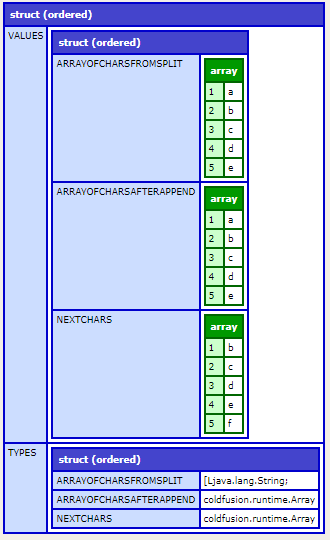

toBe works with a variety of data types

var types = ["string", 0, 0.0, true, ["array"], {struct="struct"}, queryNew(""), xmlNew()]

types.each((type) => {

it("works with #type.getClass().getName()#", () => {

expect(type).toBe(type)

})

})

The results here differ between Lucee:

works with java.lang.String

OK

works with java.lang.Double

OK

works with java.lang.Double

OK

works with java.lang.Boolean

OK

works with lucee.runtime.type.ArrayImpl

OK

works with lucee.runtime.type.StructImpl

OK

works with lucee.runtime.type.QueryImpl

OK

works with lucee.runtime.text.xml.struct.XMLDocumentStruct

Failed

And ColdFusion:

works with java.lang.String

OK

works with java.lang.Integer

OK

works with coldfusion.runtime.CFDouble

Failed

works with coldfusion.runtime.CFBoolean

OK

works with coldfusion.runtime.Array

OK

works with coldfusion.runtime.Struct

OK

works with coldfusion.sql.QueryTable

OK

works with org.apache.xerces.dom.DocumentImpl

Failed

But the thing is the tests actually work on both platforms. If you compare the objects outside the context of the test framework, the results are the same. Apparently in ColdFusion 0.0 does not equal itself. I will be taking this up with Adobe, I think.

It's good to know that it works OK for structs, arrays and queries though.
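The comparison outside the test framework can be sketched like this. It is only an approximation of that check: the variable names are made up, and it assumes the underlying Java equals() method is callable on each value, which is the same assumption toBe makes. I've deliberately not listed expected output, as the whole point is that it varies by engine.

```cfml
<cfscript>
// Compare each value with itself via the underlying Java equals() method,
// which is what toBe relies on. No test framework is involved here.
values = ["string", 0, 0.0, true]

values.each((value) => {
    // output the Java class name and the result of the self-comparison
    writeOutput("#value.getClass().getName()#: #value.equals(value)#<br>")
})
</cfscript>
```

Running this against both Lucee and ColdFusion on trycf.com should show whether a given value's equals() result comes from the engine rather than from the framework.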

The it function puts the test result on the same line as the test label, separated by a colon

As I mentioned above, I'm gonna take the <br> out from between the test message and the result, instead just colon-separating them:

// The it function puts the test result on the same line as the test label, separated by a colon

savecontent variable="testOutput" {

describe("TEST_DESCRIPTION", () => {

it("TEST_CASE_DESCRIPTION", () => {

writeOutput("TEST_CASE_RESULT")

})

})

};

if (testOutput == "TEST_DESCRIPTION<br>TEST_CASE_DESCRIPTION: TEST_CASE_RESULTOK<br>") {

writeOutput("OK<br>")

}else{

writeOutput("Failure: the it function did not work<br>")

}

void function it(required string label, required function implementation) {

try {

writeOutput("#label#: ")

implementation()

writeOutput("OK<br>")

} catch (TestFailedException e) {

writeOutput("Failed<br>")

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

That's just a change to that line before calling implementation()

Putting it to use

I can now save the implementation part of this to a gist, save another gist with my actual tests in it, and load that into trycf.com with the setupCodeGistId param pointing to my test framework Gist: https://trycf.com/gist/c631c1f47c8addb2d9aa4d7dacad114f/lucee5?setupCodeGistId=816ce84fd991c2682df612dbaf1cad11&theme=monokai. And… (sigh of relief, cos I'd not tried this until now) it all works.

Update 2022-05-06

That gist points to a newer version of this work. I made a breaking change to how it works today, but wanted the link here to still work.

Outro

This was a long one, but a lot of it was really simple code snippets, so hopefully it doesn't overflow the brain to read it. If you'd like me to clarify anything or find any bugs or I've messed something up or whatever, let me know. Also note that whilst it'll take you a while to read, and it took me bloody ages to write, if I was just doing the actual TDD exercise it's only about an hour's effort. The red/green/refactor cycle is very short.

Oh! Speaking of implementations. I never showed the final product. It's just this:

void function describe(required string label, required function testGroup) {

try {

writeOutput("#label#<br>")

testGroup()

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

void function it(required string label, required function implementation) {

try {

writeOutput("#label#: ")

implementation()

writeOutput("OK<br>")

} catch (TestFailedException e) {

writeOutput("Failed<br>")

} catch (any e) {

writeOutput("Error: #e.message#<br>")

}

}

struct function expect(required any actual) {

return {toBe = (expected) => {

if (actual.equals(expected)) {

return true

}

throw(type="TestFailedException")

}}

}

That's a working testing framework. I quite like this :-)

All the code for the entire exercise - including all the test code - can be checked-out on trycf.com.

Righto.

--

Adam