G'day

You might have noticed I spend a bit of my time encouraging people to use TDD, or at the very least making sure yer code is tested somehow. But use TDD ;-)

OK, so I rattled out a quick article a few days ago - "TDD & professionalism: a brief follow-up to Thoughts on Working Code podcast's Testing episode" - which revisits some existing ground and by-and-large is not relevant to what I'm going to say here, other than the "TDD & professionalism" angle being why I bang on about it so much. And if you think I bang on about it here, I also bang on about it at work (when I have work, I mean), and in my background conversations too. I do try to limit that to my technical associates, that said.

Right, so Mingo hit me up in a comment on that article, asking this question:

> Something I ran into was needing to access the external API for the tests and I understand that one usually uses mocking for that, right? But, my question is then: how do you then **know** that you're actually calling the API correctly? Should I build the error handling they have in their API into my mocked up API as well (so I can test my handling of invalid inputs)? This feels like way too much work. I chose to just call the API and use a test account on there, which has its own issues, because that test account could be set up differently than the multiple different live ones we have. I guess I should just verify my side of things, it's just that it's nice when it's testing everything together.

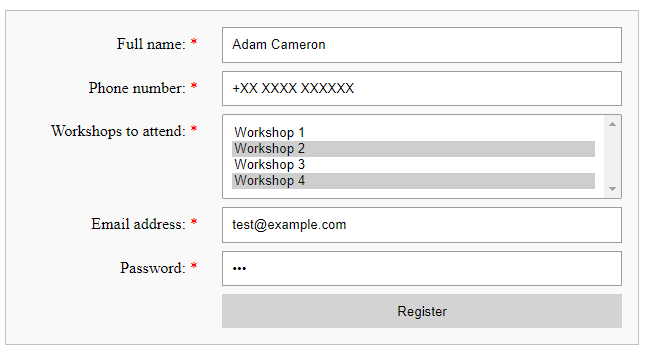

Yep, good question. With new code, my approach to TDD is based on the public interface doing what's been asked of it. One can see me working through this process in my earlier article "Symfony & TDD: adding endpoints to provide data for front-end workshop / registration requirements". In it I'm building a web service endpoint - by definition the public interface to some code - and I am always hitting the controller (via the routing). And whatever I start testing, I just "fake it until I make it". My first test case there is "It needs to return a 200-OK status for GET requests on the /workshops endpoint", and the test is this:

```php
/**
 * @testdox it needs to return a 200-OK status for successful GET requests
 * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
 */
public function testDoGetReturns200()
{
    $this->client->request('GET', '/workshops/');

    $this->assertEquals(Response::HTTP_OK, $this->client->getResponse()->getStatusCode());
}
```

To get this to pass, the first iteration of the implementation code is just this:

```php
public function doGet() : JsonResponse
{
    return new JsonResponse(null);
}
```

The next case is "It returns a collection of workshop objects, as JSON", implemented thus:

```php
/**
 * @testdox it returns a collection of workshop objects, as JSON
 * @covers \adamCameron\fullStackExercise\Controller\WorkshopsController
 */
public function testDoGetReturnsJson()
{
    $workshops = [
        new Workshop(1, 'Workshop 1'),
        new Workshop(2, 'Workshop 2')
    ];

    $this->client->request('GET', '/workshops/');

    $resultJson = $this->client->getResponse()->getContent();
    $result = json_decode($resultJson, false);

    $this->assertCount(count($workshops), $result);
    array_walk($result, function ($workshopValues, $i) use ($workshops) {
        $workshop = new Workshop($workshopValues->id, $workshopValues->name);
        $this->assertEquals($workshops[$i], $workshop);
    });
}
```

And the code to make it work shows I've pushed the mocking one level back into the application:

```php
class WorkshopsController extends AbstractController
{
    private WorkshopCollection $workshops;

    public function __construct(WorkshopCollection $workshops)
    {
        $this->workshops = $workshops;
    }

    public function doGet() : JsonResponse
    {
        $this->workshops->loadAll();

        return new JsonResponse($this->workshops);
    }
}

class WorkshopCollection implements \JsonSerializable
{
    /** @var Workshop[] */
    private $workshops;

    public function loadAll()
    {
        $this->workshops = [
            new Workshop(1, 'Workshop 1'),
            new Workshop(2, 'Workshop 2')
        ];
    }

    public function jsonSerialize()
    {
        return $this->workshops;
    }
}
```

(I've skipped a step here… the first iteration could/should be to mock the data right there in the controller, and then refactor it into the model, but this isn't about refactoring, it's about mocking).
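The Workshop class itself never appears in this article, so for anyone wondering how the JSON the test decodes comes out of that collection, here's a minimal self-contained sketch. It assumes (my assumption, not the original code) that Workshop exposes public typed id and name properties, because the test reads ->id and ->name straight off the decoded JSON. Once jsonSerialize hands back the array, json_encode serialises each Workshop from its public properties.

```php
<?php
// Hypothetical Workshop: the article doesn't show this class. Public $id and
// $name are assumed because the tests read ->id and ->name off the JSON.
class Workshop
{
    public int $id;
    public string $name;

    public function __construct(int $id, string $name)
    {
        $this->id = $id;
        $this->name = $name;
    }
}

// Mirrors the WorkshopCollection above, with the data still hard-coded.
class WorkshopCollection implements \JsonSerializable
{
    /** @var Workshop[] */
    private array $workshops = [];

    public function loadAll(): void
    {
        $this->workshops = [
            new Workshop(1, 'Workshop 1'),
            new Workshop(2, 'Workshop 2'),
        ];
    }

    // json_encode() calls this; each Workshop is then encoded from its public properties.
    public function jsonSerialize(): array
    {
        return $this->workshops;
    }
}

$collection = new WorkshopCollection();
$collection->loadAll();
$json = json_encode($collection);
echo $json, PHP_EOL; // [{"id":1,"name":"Workshop 1"},{"id":2,"name":"Workshop 2"}]
```

PHPUnit's assertEquals compares objects by value rather than identity, which is why the test can rebuild Workshop objects from the JSON and compare them against freshly constructed ones.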

From here I refactor further, so that instead of having the data itself in loadAll, the WorkshopCollection calls a repository, and the repository calls a DAO, which for now ends up being:

```php
class WorkshopsDAO
{
    public function selectAll() : array
    {
        return [
            ['id' => 1, 'name' => 'Workshop 1'],
            ['id' => 2, 'name' => 'Workshop 2']
        ];
    }
}
```
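The repository isn't shown here, so this is a rough sketch of how the layering could hang together at this step, with the DAO still returning hard-coded rows. WorkshopsRepository's private $dao property is inferred from the reflection code in the test later on; its selectAll() method, and wiring everything up by hand (Symfony's container would normally do that), are my assumptions.

```php
<?php
// Minimal value object (assumed shape; the article doesn't show it).
class Workshop
{
    public int $id;
    public string $name;

    public function __construct(int $id, string $name)
    {
        $this->id = $id;
        $this->name = $name;
    }
}

// The DAO as it stands at this step: still returning hard-coded rows.
class WorkshopsDAO
{
    public function selectAll(): array
    {
        return [
            ['id' => 1, 'name' => 'Workshop 1'],
            ['id' => 2, 'name' => 'Workshop 2'],
        ];
    }
}

// Hypothetical repository: maps raw DAO rows into Workshop objects.
// The property is called $dao because the later test reflects on 'dao';
// the method name selectAll() is an assumption.
class WorkshopsRepository
{
    private WorkshopsDAO $dao;

    public function __construct(WorkshopsDAO $dao)
    {
        $this->dao = $dao;
    }

    /** @return Workshop[] */
    public function selectAll(): array
    {
        return array_map(
            fn (array $row) => new Workshop((int) $row['id'], $row['name']),
            $this->dao->selectAll()
        );
    }
}

// The collection now asks the repository rather than holding the data itself.
class WorkshopCollection implements \JsonSerializable
{
    private WorkshopsRepository $repository;

    /** @var Workshop[] */
    private array $workshops = [];

    public function __construct(WorkshopsRepository $repository)
    {
        $this->repository = $repository;
    }

    public function loadAll(): void
    {
        $this->workshops = $this->repository->selectAll();
    }

    public function jsonSerialize(): array
    {
        return $this->workshops;
    }
}

$collection = new WorkshopCollection(new WorkshopsRepository(new WorkshopsDAO()));
$collection->loadAll();
echo json_encode($collection), PHP_EOL;
```

The point of the layering is that each tier can be swapped independently: the collection doesn't care whether the rows came from an array literal or a SQL query.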

This is where Mingo's question comes in. The next refactor swaps out the mocked data for a DB call, and we'll end up with this:

```php
class WorkshopsDAO
{
    private Connection $connection;

    public function __construct(Connection $connection)
    {
        $this->connection = $connection;
    }

    public function selectAll() : array
    {
        $sql = "
            SELECT
                id, name
            FROM
                workshops
            ORDER BY
                id ASC
        ";
        $statement = $this->connection->executeQuery($sql);

        return $statement->fetchAllAssociative();
    }
}
```

But wait: if we do that, our unit tests will be hitting the DB, which we are not gonna do. We've run out of things to directly mock, as we're at the lower boundary of our application, and the connection object is "someone else's code" (Doctrine/DBAL in this case). We shouldn't mock that, but fortunately this is why I have the DAO tier. It acts as the frontier between our app and the external service provider, and we can still mock that:

```php
public function testDoGetReturnsJson()
{
    $workshopDbValues = [
        ['id' => 1, 'name' => 'Workshop 1'],
        ['id' => 2, 'name' => 'Workshop 2']
    ];
    $this->mockWorkshopDaoInServiceContainer($workshopDbValues);

    // ... unchanged ...

    array_walk($result, function ($workshopValues, $i) use ($workshopDbValues) {
        // json_decode(..., false) yields stdClass objects, so cast to an array
        // before comparing with the raw DB-row arrays
        $this->assertEquals($workshopDbValues[$i], (array) $workshopValues);
    });
}

private function mockWorkshopDaoInServiceContainer($returnValue = []): void
{
    $mockedDao = $this->createMock(WorkshopsDAO::class);
    $mockedDao->method('selectAll')->willReturn($returnValue);

    // swap the mock into the repository the container has already built
    $container = $this->client->getContainer();
    $workshopRepository = $container->get('test.WorkshopsRepository');

    $reflection = new \ReflectionClass($workshopRepository);
    $property = $reflection->getProperty('dao');
    $property->setAccessible(true);
    $property->setValue($workshopRepository, $mockedDao);
}
```

We just use a mocking library (baked into PHPUnit in this case) to create a runtime mock, and we put that into our repository.
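If the PHPUnit mock and the reflection feel a bit magic, here's the same idea spelled out in plain PHP. A hand-rolled stub subclass stands in for createMock(WorkshopsDAO::class)->method('selectAll')->willReturn(...), and ReflectionProperty overwrites the repository's private $dao, much as mockWorkshopDaoInServiceContainer does. The class bodies are simplified stand-ins rather than the real app code, and StubWorkshopsDAO and replaceDao are hypothetical names.

```php
<?php
// Stand-in for the DB-backed WorkshopsDAO; the real one takes a DBAL Connection.
class WorkshopsDAO
{
    public function selectAll(): array
    {
        throw new \RuntimeException('This would query the real database');
    }
}

// Hand-rolled stub doing what createMock()->method('selectAll')->willReturn() does.
// Its constructor doesn't call parent::__construct(), so a stub of the real DAO
// wouldn't need a Connection either.
class StubWorkshopsDAO extends WorkshopsDAO
{
    private array $rows;

    public function __construct(array $rows)
    {
        $this->rows = $rows;
    }

    public function selectAll(): array
    {
        return $this->rows;
    }
}

// Hypothetical repository holding the DAO in a private $dao property.
class WorkshopsRepository
{
    private WorkshopsDAO $dao;

    public function __construct(WorkshopsDAO $dao)
    {
        $this->dao = $dao;
    }

    public function selectAll(): array
    {
        return $this->dao->selectAll();
    }
}

// Overwrite the private property on an already-built object.
function replaceDao(WorkshopsRepository $repository, WorkshopsDAO $dao): void
{
    $property = new \ReflectionProperty(WorkshopsRepository::class, 'dao');
    if (PHP_VERSION_ID < 80100) {
        // Needed before PHP 8.1; from 8.1 reflection can write private properties anyway.
        $property->setAccessible(true);
    }
    $property->setValue($repository, $dao);
}

$repository = new WorkshopsRepository(new WorkshopsDAO());
replaceDao($repository, new StubWorkshopsDAO([['id' => 1, 'name' => 'Workshop 1']]));
print_r($repository->selectAll());
```

Reflection is only needed because the container has already built the repository; when you construct the object yourself, passing the stub into the constructor is simpler.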

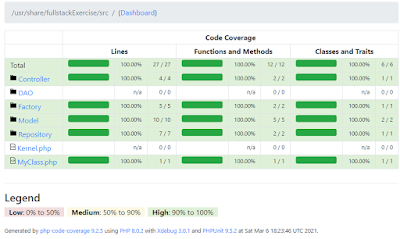

The tests pass, the DB is left alone, and the code is "complete", so we could perhaps push it to production. But - as Mingo observed - we are not actually testing that what we are asking the DB to do is being done, because all our tests mock the DB part of things out.

The solution is easy, but it's not done via a unit test. It's done via an integration test (or end-to-end test, or acceptance test or whatever you wanna call it), which hits the real endpoint, which queries the real database and gets the real data. Alongside that, the test hits the DB directly to fetch the records we're expecting, and then checks that the JSON the endpoint returns represents the same data we fetched manually. This tests the SQL statement in the DAO, that the data fetched models OK in the repo, and that the model (WorkshopCollection here) applies whatever business logic is necessary to the data from the repo before passing it back to the controller to return with the response, which was requested via the external URL. IE: it tests end-to-end.

```php
public function testDoGetExternally()
{
    $client = new Client([
        'base_uri' => 'http://fullstackexercise.backend/'
    ]);

    $response = $client->get('workshops/');
    $this->assertEquals(Response::HTTP_OK, $response->getStatusCode());
    $workshops = json_decode($response->getBody(), false);

    /** @var Connection */
    $connection = static::$container->get('database_connection');
    $expectedRecords = $connection->query("SELECT id, name FROM workshops ORDER BY id ASC")->fetchAll();

    $this->assertCount(count($expectedRecords), $workshops);
    array_walk($expectedRecords, function ($record, $i) use ($workshops) {
        $this->assertEquals($record['id'], $workshops[$i]->id);
        $this->assertSame($record['name'], $workshops[$i]->name);
    });
}
```
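The comparison at the end of testDoGetExternally boils down to the following, shown as a plain function (workshopsMatchRecords is a hypothetical name) fed with canned data instead of a real DB query and HTTP call. My guess at why the test uses assertEquals for id but assertSame for name: depending on the DB driver and PHP version, the id in the DB row can come back as a string while the JSON holds an int, so id needs a loose comparison.

```php
<?php
/**
 * Hypothetical helper: checks that workshops decoded from the endpoint's JSON
 * match rows fetched directly from the DB, position by position.
 * id is compared loosely (!=) because the DB row may hold it as a string;
 * name is compared strictly (!==).
 */
function workshopsMatchRecords(array $records, array $workshops): bool
{
    if (count($records) !== count($workshops)) {
        return false;
    }
    foreach ($records as $i => $record) {
        if ($record['id'] != $workshops[$i]->id || $record['name'] !== $workshops[$i]->name) {
            return false;
        }
    }
    return true;
}

// Canned stand-ins: DB rows with string ids, and the endpoint's decoded JSON.
$records = [['id' => '1', 'name' => 'Workshop 1'], ['id' => '2', 'name' => 'Workshop 2']];
$workshops = json_decode('[{"id":1,"name":"Workshop 1"},{"id":2,"name":"Workshop 2"}]', false);

var_dump(workshopsMatchRecords($records, $workshops)); // bool(true)
```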

Note that although I'm saying "it's not a unit test, it's an integration test", I'm still implementing it via PHPUnit. The testing framework should just provide testing functionality: it should not dictate what kind of testing you implement with it. Similarly, not all tests written with PHPUnit are unit tests. They are xUnit-style tests, eg: in a class called SomethingTest, with the methods prefixed with test and using assertion methods to implement the test constraints.
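To illustrate that "xUnit style" is a shape rather than a kind of test, here's a toy runner in plain PHP. It is not PHPUnit, and TestCase, AssertionFailed and runTests are names made up for the sketch: it reflects over a class named SomethingTest, runs every public method whose name starts with test on a fresh instance, and records a pass or fail based on the assertion methods. Nothing in it cares whether a test body hits a database or not.

```php
<?php
// Toy xUnit-style runner, NOT PHPUnit: every name here is made up for the sketch.
class AssertionFailed extends \Exception
{
}

abstract class TestCase
{
    // Assertion methods live on the base class, as in PHPUnit.
    protected function assertEquals($expected, $actual): void
    {
        if ($expected != $actual) {
            throw new AssertionFailed(sprintf(
                'Expected %s, got %s',
                var_export($expected, true),
                var_export($actual, true)
            ));
        }
    }
}

// Runs each public method whose name starts with "test" on a fresh instance.
function runTests(string $className): array
{
    $results = [];
    $class = new \ReflectionClass($className);
    foreach ($class->getMethods(\ReflectionMethod::IS_PUBLIC) as $method) {
        $name = $method->getName();
        if (strpos($name, 'test') !== 0) {
            continue; // not a test method by naming convention
        }
        try {
            $method->invoke(new $className());
            $results[$name] = 'pass';
        } catch (AssertionFailed $e) {
            $results[$name] = 'fail: ' . $e->getMessage();
        }
    }
    return $results;
}

class ArithmeticTest extends TestCase
{
    public function testAdditionWorks(): void
    {
        $this->assertEquals(4, 2 + 2);
    }

    public function testThisOneFails(): void
    {
        $this->assertEquals(5, 2 + 2);
    }

    public function helperIsNotATest(): void
    {
    }
}

print_r(runTests(ArithmeticTest::class));
```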

Also: why don't I just use end-to-end tests then? They seem more reliable? Yep, they are. However, they are also more fiddly to write, as they have more set-up / tear-down overhead, so they take longer to write. They also generally take longer to run, and given TDD is supposed to be a very quick cadence of test / run / code / run / refactor / run, the less overhead the better. The slower your tests are, the more likely you are to switch to writing code and only testing it later, once you need to clear your head. In the meantime your code design has gone out the window. Also, unit tests are more focused - addressing only a small part of the codebase overall - and that has merit in itself. And I used a really, really trivial example here, but some end-to-end tests are very tricky to write, given the overall complexity of the functionality being tested. I was lucky enough at my last gig to have a dedicated QA development team, and they wrote the end-to-end tests for us, but this also meant that those tests were executed after the dev considered the task "code complete", and QA ran the tests to verify this. There is no definitive way of doing this stuff, that said.

To round this out, I'm gonna digress into another chat I had with Mingo yesterday:

Normally I'd say this:

- Unit tests
    - Test the logic of one small part of the code (maybe a public method in one class). If you were doing TDD and about to add a condition into your logic, you'd write a unit test to cover the new expectations that the condition brings to the mix.
- Functional tests
    - These are a subset of unit tests which might test a broader section of the application, eg: from the public frontier of the application (like an endpoint) down to where the code leaves the system (to a logger, or a DB, or whatever). The difference between unit tests and functional tests - to me - is just how widely distributed the logic being tested is throughout the system.
- Integration tests
    - Test that the external connections all work fine. So if you use the app's DB configuration, the correct database is usable. I'd personally consider a test an integration test if it only focused on a single integration.
- Acceptance tests (or end-to-end tests)
    - Are to integration tests what functional tests are to unit tests: a broader subset. The test above is an end-to-end test: it tests the web server, the application and the DB.

And yes I know the usages of these terms vary a bit.

Furthermore, considering the distinction between BDD and TDD:

- The BDD part is the nicely-worded case labels, which in theory (but seldom in practice, I find) are written in direct collaboration with the client user.

- The TDD part is when, in the design phase, they are created: with TDD it's before the implementation is written; I am not sure whether in BDD it matters or is stipulated.

- But both of them are design / development strategies, not testing strategies.

- The tests can be implemented as any sort of test, not specifically unit tests or functional tests or end-to-end tests. The point is the test defines the design of the piece of code being written: it codifies the expectations of the behaviour of the code.

- BDD and TDD tests are generally implemented via some unit testing framework, be it xUnit (testMyMethodDoesSomethingRight) or Jasmine-esque (it("does something right", function () {})).
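For contrast with the xUnit shape, here's a toy Jasmine-esque harness, written in PHP so both styles are in the same language. describe, it, expectSame and the Spec class are all hypothetical, not how Mocha or Jasmine are actually implemented: test cases are closures registered with plain-English labels, which is where the BDD-style case naming naturally lives.

```php
<?php
// Toy Jasmine-esque harness (not Jasmine or Mocha): each case is a closure
// registered with a plain-English label, instead of a method named test*.
final class Spec
{
    /** @var array<string, string> full label => outcome */
    public static array $results = [];
    private static string $suite = '';

    public static function describe(string $label, callable $body): void
    {
        $previous = self::$suite;
        self::$suite = trim("$previous $label"); // supports nested describe()
        $body();
        self::$suite = $previous;
    }

    public static function it(string $label, callable $body): void
    {
        $name = trim(self::$suite . ' ' . $label);
        try {
            $body();
            self::$results[$name] = 'pass';
        } catch (\Throwable $e) {
            self::$results[$name] = 'fail: ' . $e->getMessage();
        }
    }
}

function describe(string $label, callable $body): void
{
    Spec::describe($label, $body);
}

function it(string $label, callable $body): void
{
    Spec::it($label, $body);
}

function expectSame($expected, $actual): void
{
    if ($expected !== $actual) {
        throw new \Exception(sprintf('expected %s, got %s', var_export($expected, true), var_export($actual, true)));
    }
}

describe('array_sum', function () {
    it('adds up the values', function () {
        expectSame(6, array_sum([1, 2, 3]));
    });
    it('returns 0 for an empty array', function () {
        expectSame(0, array_sum([]));
    });
});

print_r(Spec::$results);
```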

One can also do testing that is not TDD or BDD, but it's a less-than-ideal way of going about things, and I would imagine it results in subpar tests, fragmented test coverage, and tests that don't really help one understand the application, so are harder to maintain in a meaningful way. But they are still better than no tests at all.

When I am designing my code, I use TDD, and I consider my test cases in a BDD-ish fashion (except I generally do it on the client's behalf, sadly), and I use PHPUnit (xUnit) to do so in PHP, and Mocha (Jasmine-esque) to do so in JavaScript.

Hopefully that clarifies some things for people. Or people will leap at me and tell me where I'm wrong, and I can learn the error of my ways.

Righto.

--

Adam