G'day:

(Long time no see, etc)

The other day on the CFML Slack channel, whilst being in a pedantic mood, I pointed out to my mate John Whish (OG CFMLer) that he was using the term "singleton" when all he really meant was "an object that is reused". A brief chat in the thread and in DM ensued, and was all good. We both revisited the docs on the Singleton Pattern, and refreshed & improved our understanding of it, and were better for it. Cool. The end. Short article.

...

..

Then the "well ackshually" crowd showed up, and engaged in a decreasingly meritorious diatribe about how there's no difference between a class that implements the Singleton Pattern, and an object that one happens to decide to reuse: they're both just singletons.

OK so the reasoning wasn't quite that daft (well from one quarter, anyhow), but the positioning was missing a degree of nuance, and there was too much doubling down in the "I'm right" dept that I just gave up: fine fellas, you do you. In the meantime I was still talking to John in DM, and I mentioned I was now keen to see how an actual singleton might come together in CFML, so there was likely a blog article ensuing. I predicted CFML would throw up some barriers to doing this smoothly, which is interesting to me; and hopefully other readers - if I have any still? - can improve their understanding of the design pattern.

I'll put to bed the "debate" first.

The notion of a singleton comes from the Singleton Pattern, which is one of the patterns in the perennial GoF Design Patterns book. It's a specific technique to achieve an end goal.

What's the end goal? Basically one of code organisation around the notion that some objects are intended to be re-used, and possibly even more strongly: must be re-used in the context of the application they are running in. One should not have more than one of these objects in play. An obvious, oft-cited, and as it turns out: unhelpful, example might be a Logger. Or a DatabaseConnection. One doesn't need to create and initialise a new DatabaseConnection object every time one wants to talk to the DB: one just wants to get on with it. If one needed to instantiate the DatabaseConnection every time it was used, the code gets unwieldy, breaks the Single Responsibility Principle, and is prone to error. Here's a naïve example:

numbers = new DatabaseConnection({

host = "localhost",

port = "3306",

user = "root",

password = "123", // eeeeek

database = "myDb"

}).execute("SELECT * FROM numbers")

One does not wanna be doing all that every time one wants to make a call to the DB. It means way too much code is gonna need to know minutiae of how to connect to the DB. One will quickly point out the deets don't need to be inline like that (esp the password!), and can be in variables. But then you still need to pass the DB credentials about the place.

There's plenty of ways to solve this, but the strategy behind the Singleton Pattern is to create a class that controls the usage of itself, such that when an instance is called for, the calling code always gets the same instance. The key bit here is a class that controls the usage of itself[…] always[…] the same instance. That is what defines a singleton.

My derision in the Slack conversation was that the other participants were like "yeah but one can do that with a normal object just by only creating one of them and reusing it (muttermutterDIcontainer)". Yes. Absolutely. One can def do that, and often it's exactly what is needed. And DI containers are dead useful! But that's "a normal object […] and reusing it". Not a singleton. Words have frickin meanings(*). I really dunno why this is hard to grasp.

It's like someone pointing to a white vase with blue art work on it, and going "this is my Ming vase". And when one then points out it says "IKEA" on the bottom, they go "doesn't matter. White with blue detail, and can put flowers in it. That's what a Ming vase is, for all intensive purposes (sic)". "You know a Ming vase is a specific sort of vase, right?". "DOESN'T MATTER STILL HOLDS FLOWERS". OK mate.

Ah well.
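For the record, the "normal object, reused" approach looks something like the sketch below. It's only an illustration: Logger is a hypothetical, perfectly ordinary class (as is its info method), and the application scope is just one of the places one might park the instance.

```cfml
// Application.cfc
component {

    this.name = "reusedObjectExample"

    public boolean function onApplicationStart() {
        // Logger is a hypothetical ordinary class: it knows nothing about how many instances exist
        application.logger = new Logger()
        return true
    }
}

// anywhere.cfm
// reusing the one instance created at application start (info() is a hypothetical method)
application.logger.info("reusing the one we made earlier")

// ...but nothing stops calling code from doing this as well
anotherLogger = new Logger()
```

The reuse here is a decision made by the calling code, not something the class enforces. Dead useful, but not the Singleton Pattern.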

Anyhoo, can I come up with a singleton implementation in CFML?

The first step of this didn't go well:

// Highlander.cfc

component {

private Highlander function init() {

throw "should not be runnable"

}

}

// test.cfm

connor = new Highlander()

writeDump(connor)

I'd expect an exception here, but both ColdFusion and Lucee just ignore the init method, and I get an object. Sigh.

This is easily worked-around, but already my code is gonna need to be less idiomatic than I'd like it to be:

public Highlander function init() {

throw "Cannot be instantiated directly. Use getInstance"

}

Now I get the exception.

Next I start working on the getInstance method:

public static Highlander function getInstance() {

return createObject("Highlander")

}

// test.cfm

connor = Highlander::getInstance()

writeDump(connor)

This still returns a new instance of the object every time, but it's simply a first step. To easily show whether instances are the same or different, I'm gonna give them an ID:

// Highlander.cfc

component {

variables.id = createUuid()

public Highlander function init() {

throw "Cannot be instantiated directly. Use getInstance"

}

public static Highlander function getInstance() {

return createObject("Highlander")

}

public string function getId() {

return variables.id

}

}

// test.cfm

connor = Highlander::getInstance()

writeDump(connor)

goner = Highlander::getInstance()

writeDump([

connor = connor.getId(),

goner = goner.getId()

])

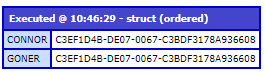

See how the IDs are different: they're different objects.

We solve this by making getInstance only create the object instance once for the life of the class (not the object: the class).

public static Highlander function getInstance() {

static.instance = isNull(static.instance)

? createObject("Highlander")

: static.instance

return static.instance

}

It checks if there's already an instance of itself that it's created before. If so: return it. If not, create and store the instance, and then return it.

Now we get better results from the test code:

Now it's the same ID. Note that this is not isolated to that request: it sticks for every request for the life of the class (which is usually the life of the JVM, or until the class needs to be recompiled). I'm altering my writeDump call slightly:

writeDump(

label = "Executed @ #now().timeFormat('HH:mm:ss')#",

var = [

connor = connor.getId(),

goner = goner.getId()

]

)

The ID sticks across requests. It's not until I restart my ColdFusion container that the static class object is recreated, and I get a new ID:

One flaw in this implementation is that there's nothing to stop the calling code using createObject rather than using new to try to create an instance of the object. EG: this "works":

// test2.cfm

connor = createObject("Highlander")

goner = createObject("Highlander")

writeDump(

label = "Executed @ #now().timeFormat('HH:mm:ss')#",

var = [

connor = connor.getId(),

goner = goner.getId()

]

)

When I say this "works" I am setting the bar very low, in that "it doesn't error": it's not how the Highlander class is intended to be used though.

Oh: in case it's not clear why there's no exception here: it's cos when one uses createObject, the init method is not automatically called.
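A quick way to see the difference, as a sketch run against the Highlander.cfc above (the file name test3.cfm is made up for the example):

```cfml
// test3.cfm
connor = createObject("Highlander") // no exception: init() is never called

try {
    // explicitly chaining init() runs the method, so this one does throw
    goner = createObject("Highlander").init()
} catch (any e) {
    writeOutput(e.message)
}
```

So the init-based guard only protects against new, or against calling code that is polite enough to call init itself.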

Can I guard against this?

Sigh.

OK, on Lucee I can do this with minimal fuss:

// Highlander.cfc

component {

if (static?.getInstanceUsed !== true) {

throw "Cannot be instantiated directly. Use getInstance"

}

variables.id = createUuid()

public Highlander function init() {

throw "Cannot be instantiated directly. Use getInstance"

}

public static Highlander function getInstance() {

static.getInstanceUsed = true

static.instance = isNull(static.instance)

? createObject("Highlander")

: static.instance

static.getInstanceUsed = false

return static.instance

}

public string function getId() {

return variables.id

}

}

What's going on here? The conceit is that the class's pseudo-constructor code is only executed during object creation, and when we are creating an object via getInstance we disable the "safety" in the pseudo-constructor, but then re-enable it once we're done creating the instance. If we don't use getInstance, then the safety has either never been set - exception - or it's been set to false by an erstwhile call to getInstance - also exception.

Looking at that code, I can see that there's a race-condition potential with getInstance's setting/unsetting of the safety, so in the real world that code should be locked.
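A minimal sketch of what that locking might look like, for Lucee only. The lock name and timeout are arbitrary choices of mine, and the try/finally is an addition to make sure the safety goes back on even if object creation fails:

```cfml
public static Highlander function getInstance() {
    // named exclusive lock: only one request at a time can be in this block
    // (the name just needs to be unique to this class; the timeout is in seconds)
    lock name="Highlander.getInstance" type="exclusive" timeout="5" {
        static.getInstanceUsed = true
        try {
            static.instance = isNull(static.instance)
                ? createObject("Highlander")
                : static.instance
        } finally {
            // re-enable the safety whether or not createObject succeeded
            static.getInstanceUsed = false
        }
    }
    return static.instance
}
```

This stops one call to getInstance from resetting the flag underneath another one. It does not close the hole completely: a direct createObject call on another thread is not inside the lock, so it could in theory still sneak through during the brief window when the flag is true.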

As I alluded to above: this code does not work in ColdFusion, because ColdFusion has a bug in that the pseudo-constructor is run even when running static methods, so the call to getInstance incorrectly calls the pseudo-constructor code, and triggers the safety check. Sigh. I can't be arsed coming up with a different way to work around this just for ColdFusion. I will raise a bug with them though (TBC). ColdFusion also has another bug in that static?.getInstanceUsed !== true errors if static.getInstanceUsed is null, as the !== doesn't like it. I guess I'll raise that too (also TBC).

So. There we go. A singleton implementation.

PHP's OOP is more mature than CFML's, so a PHP implementation of this is a bit more succinct/elegant:

class Highlander {

private ?string $id;

private static self $instance;

private function __construct() {

$this->id = uniqid();

}

public function getId() : string {

return $this->id;

}

public static function getInstance() : static {

self::$instance = self::$instance ?? new static();

return static::$instance;

}

}

$connor = Highlander::getInstance();

$goner = Highlander::getInstance();

var_dump([

'connor' => $connor->getId(),

'goner' => $goner->getId()

]);

$transient = new Highlander();

array(2) {

["connor"]=>

string(13) "66d457f9d4cd1"

["goner"]=>

string(13) "66d457f9d4cd1"

}

Fatal error: Uncaught Error: Call to private Highlander::__construct() from global scope in /home/user/scripts/code.php:30

Stack trace:

#0 {main}

thrown in /home/user/scripts/code.php on line 30

And that's that.

Righto.

--

Adam